Welcome to the vRealize Automation 7.2 Detailed Implementation VIDEO Guide. This is a collection of all the videos making up the full vRealize Automation 7.2 Detailed Implementation Guide.

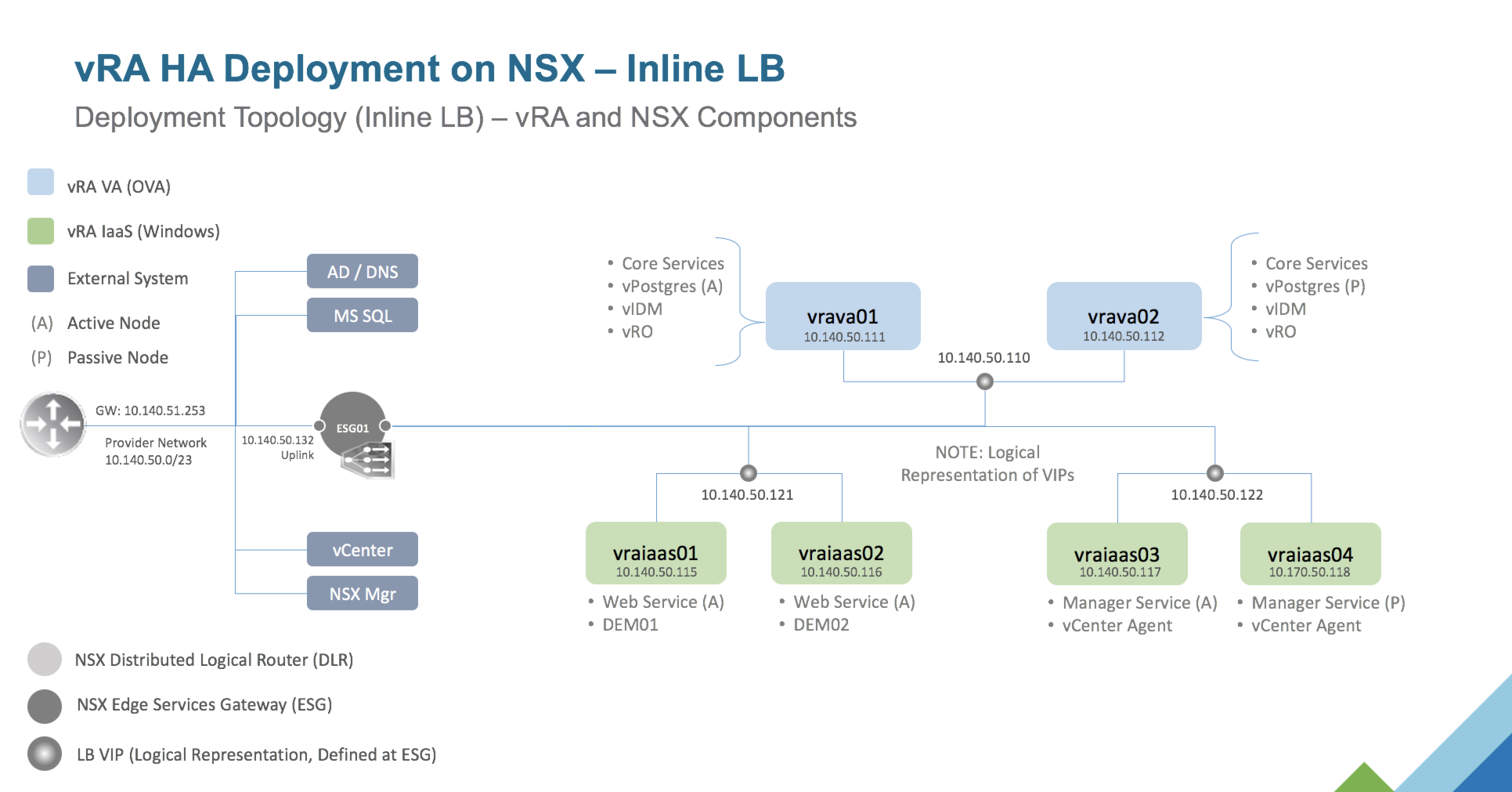

The guide (and these videos) were put together to help you deploy and configure a highly available, production-worthy vRealize Automation 7.2 distributed environment, complete with SDDC integration (e.g. VSAN, NSX), extensibility examples, and ecosystem integrations. The design assumes VMware NSX will provide the load balancing capabilities and includes details on deploying and configuring NSX from scratch to deliver these capabilities.

High-Level Overview

- Production deployments of vRealize Automation (vRA) should be configured for high availability (HA)

- The vRA Deployment Wizard supports Minimal (staging / POC) deployments and Enterprise (distributed / HA) deployments for production, per the Reference Architecture

- Enterprise deployments require external load balancing services to support high availability and load distribution for several vRA services

- VMware validates (and documents) distributed deployments with F5 and NSX load balancers

- This document provides a sample configuration of a vRealize Automation 7.2 Distributed HA Deployment Architecture using VMware NSX for load balancing

Implementation Overview

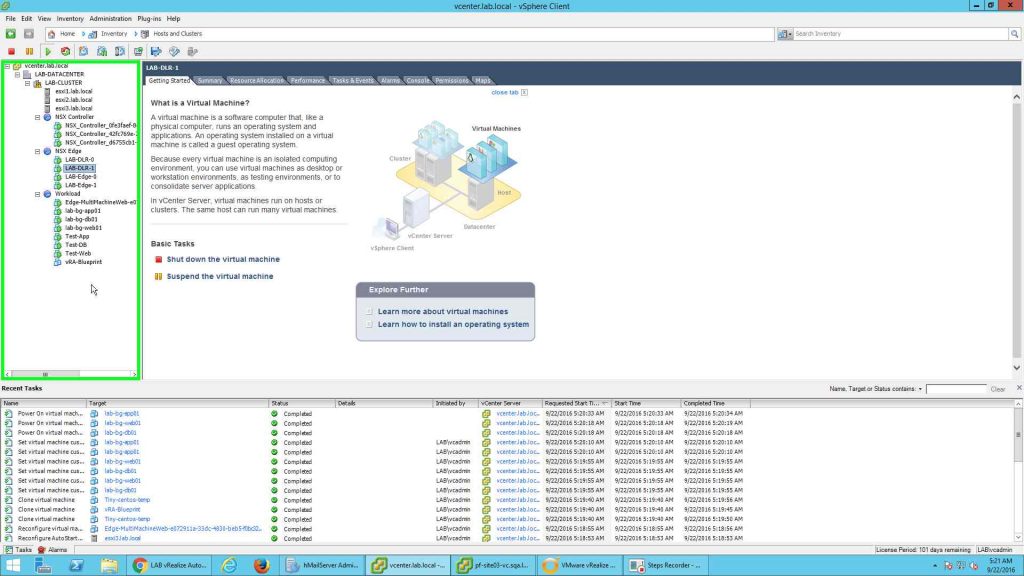

To set the stage, here’s a high-level view of the vRA nodes that will be deployed in this exercise. While a vRA POC can typically be done with 2 nodes (vRA VA + IaaS node on Windows), a distributed deployment can scale anywhere from 4 (min) to a dozen or more components. The right number depends on the expected scale, which is primarily driven by user access and concurrent operations. We will be deploying six (6) nodes in total: two (2) vRA appliances and four (4) Windows machines to support vRA’s IaaS services. This is equivalent to somewhere between a “small” and “medium” enterprise deployment, and it is a good starting point that balances scale and supportability.

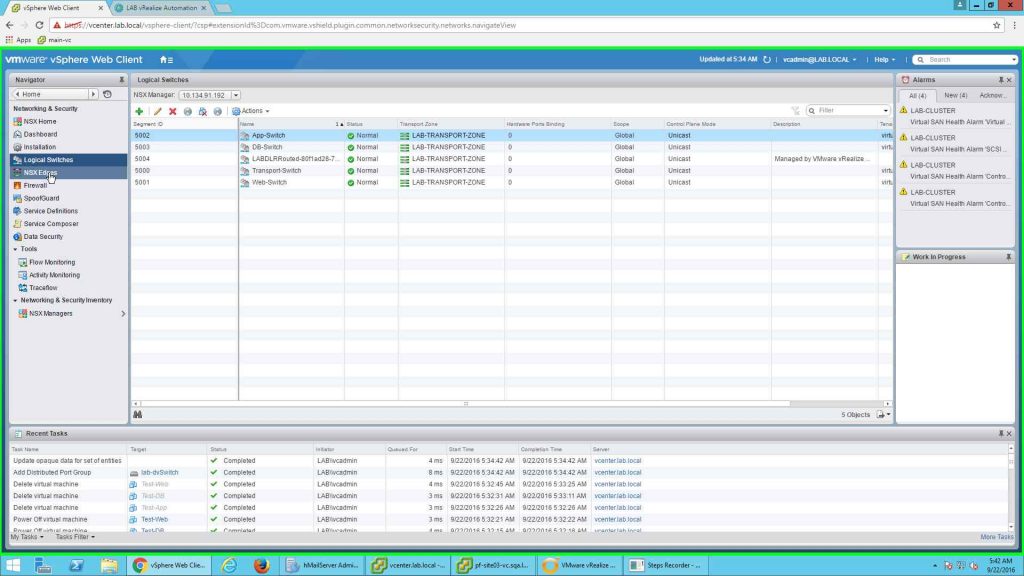

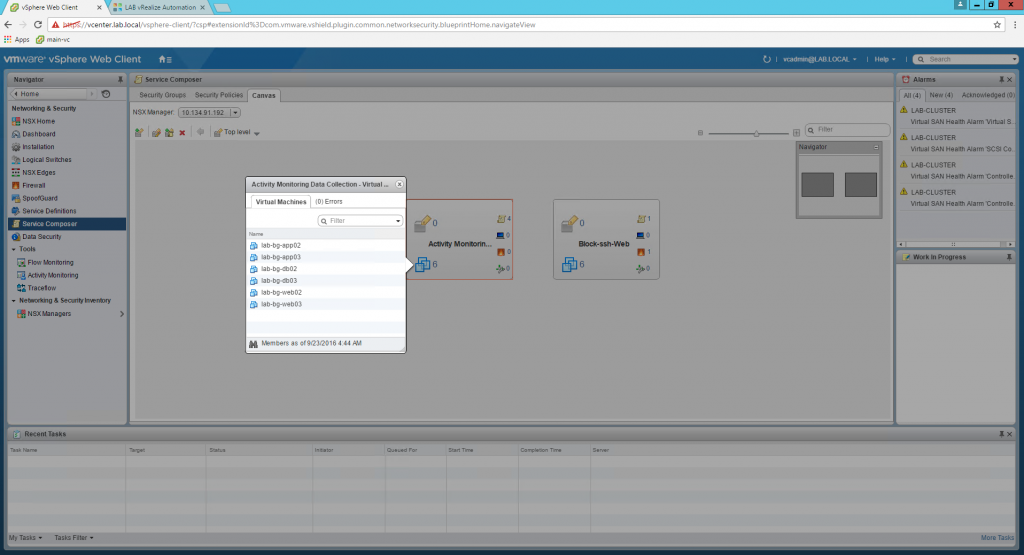

We will be leveraging VMware NSX in this implementation to provide the load balancing services for the vRA deployment as well as integrating into vRA for application-centric network and security. Before any of this is possible, we must deploy NSX to the vSphere cluster, prepare the hosts, and configure logical network services. The guide assumes the use of NSX for these services, but this is NOT a requirement. A distributed installation of vRA can be accomplished with most load balancers. VMware certifies NSX, F5, and NetScaler.

(You can skip this section if you do not plan on using NSX in your environment)

The vRA virtual appliance (OVA) is downloaded from vmware.com and deployed to a vSphere environment. In a distributed deployment, you will deploy both primary and secondary nodes ahead of kicking off the deployment wizard.

The VA also includes the latest IaaS installers, including the required management agent (that will be covered in the next section).

vRA’s IaaS engine is a .NET-based application that is installed on a number of dedicated Windows machines. In the old days, the IaaS components were manually installed, configured, and registered with the vRA appliance(s). This included manual installation of many prerequisites. The effort was quite tedious and error-prone, especially in a large distributed environment.

In vRA 7.0 and higher, the installation and configuration of system prerequisites and IaaS components have been fully automated by the Deployment Wizard. But prior to kicking off the wizard, the vRA Management Agent needs to be installed on each IaaS host. Once installed, the host is registered with the primary virtual appliance and made available for IaaS installation during the deployment. While the Deployment Wizard will automatically push most of the prerequisites (after a prerequisite check), you have the option to install any or all of the prereqs ahead of time. However, the wizard’s success rate has improved greatly, and letting it handle the prerequisites is the preferred method for most environments.

The Deployment Wizard is invoked by logging into the primary VA’s Virtual Appliance Management Interface (VAMI) using the configured root account. Once logged in, the admin is immediately presented with the new Deployment Wizard UI. The wizard offers a choice of a minimal (POC, small) or enterprise (HA, distributed) deployment and then, based on the desired deployment type, walks you through a series of configuration details needed for the various working parts of vRA, including all the Windows-based IaaS components and dependencies. For HA deployments, all the core components are automatically clustered and made highly available based on these inputs.

In both Minimal and Enterprise deployments, the IaaS components (Manager Service, Web Service, DEMs, and Agents) are automatically pushed to the Windows IaaS servers that the management agent has made available to the installer.

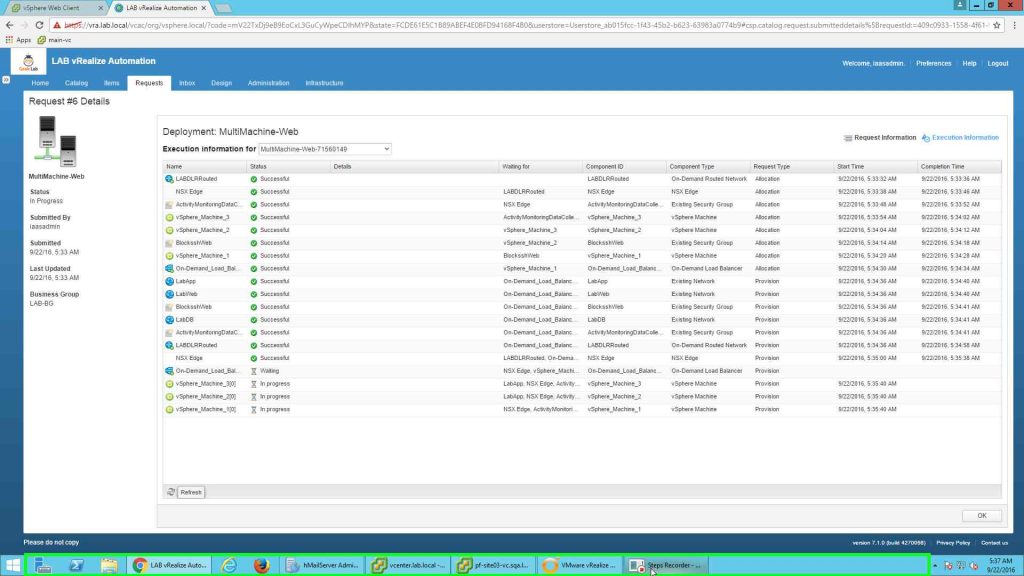

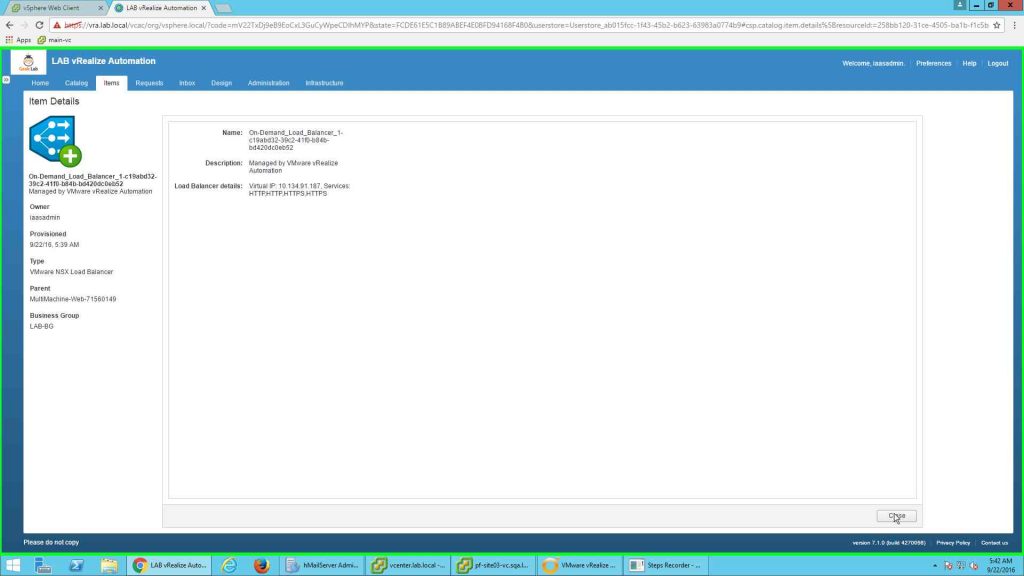

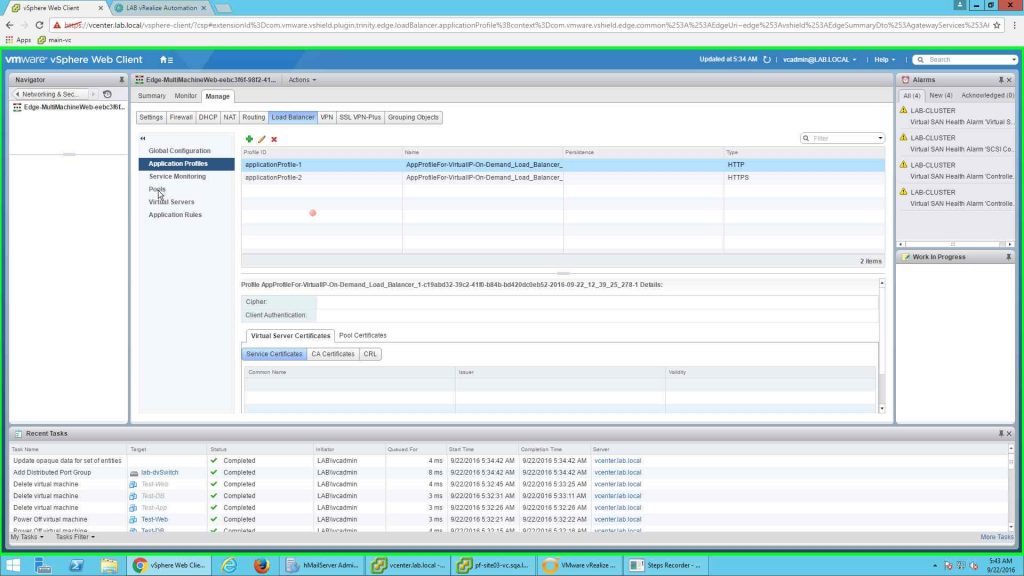

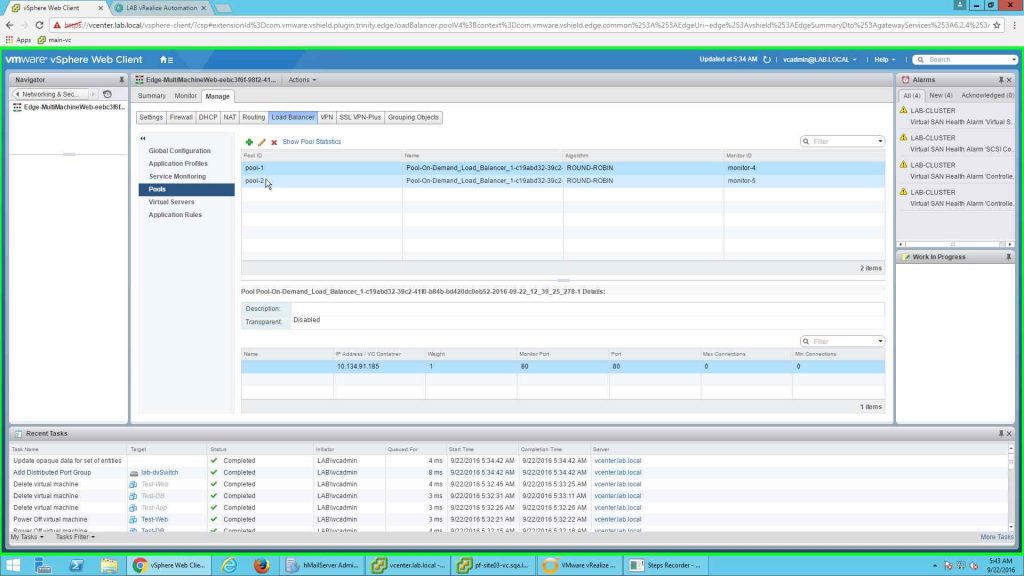

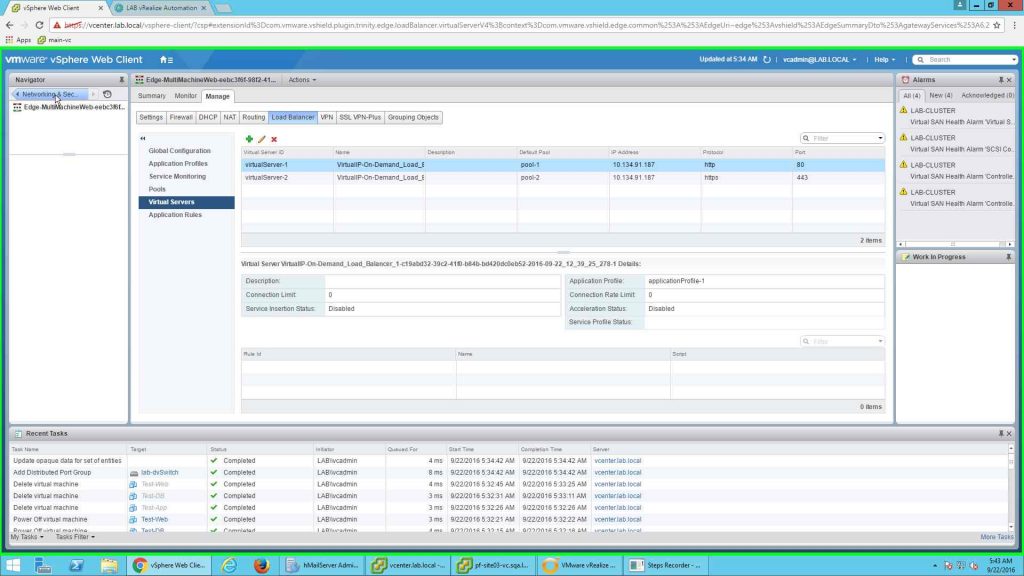

Next we’ll be configuring load balancing and high availability policies for the distributed components. An NSX Edge Service Gateway (ESG) will be providing the load balancing and availability services to vRA as an infrastructure service. vRA supports In-Line and One-Arm load balancing policies. This implementation will be based on an In-Line configuration, where the vRA nodes and the load balancer VIPs are on the same subnet.

(If you do not plan on using NSX for HA services, you can skip this configuration)

Next up, we’ll be completing the initial tenant configuration. This involves configuring an authentication directory in the default tenant (vsphere.local), syncing with the domain, and getting some accounts added to various vRA roles.

08, IaaS Fabric Configuration

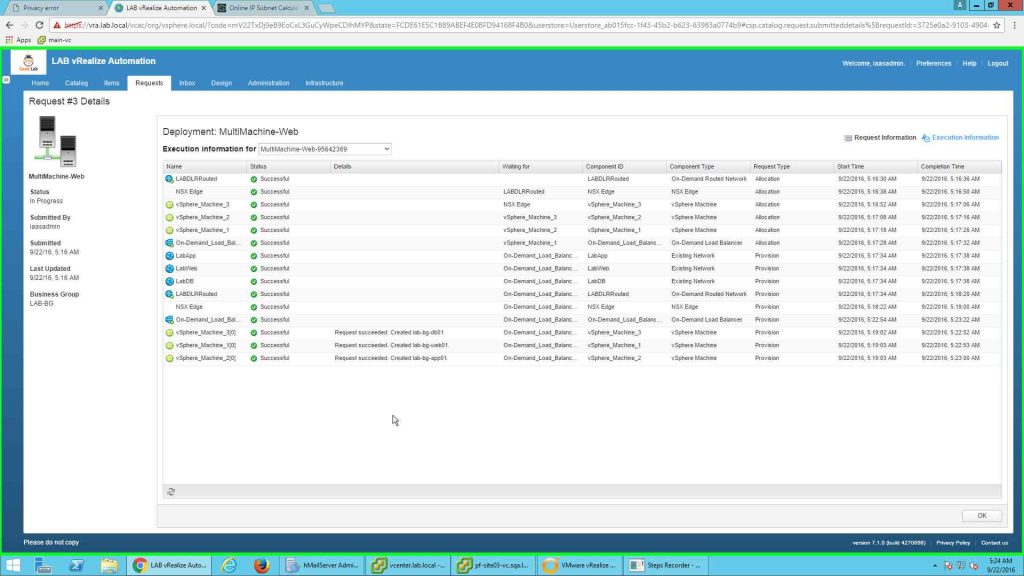

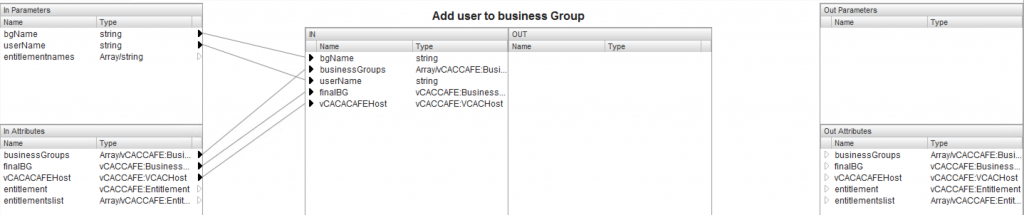

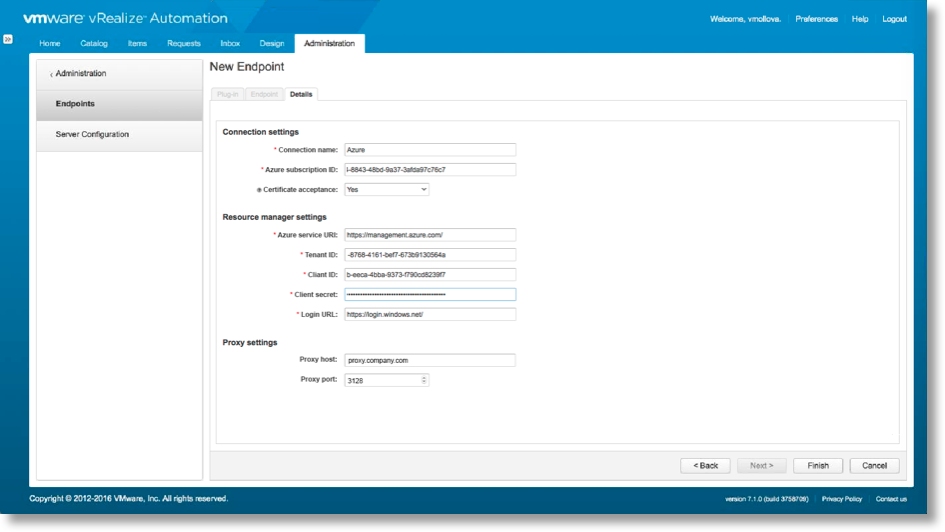

The IaaS Fabric is made up of all the infrastructure components that are configured to provide aggregate resources to provisioned machines and applications. vRA’s IaaS Fabric is made up of several logical constructs that are configured to identify and collect private and public cloud resources (Endpoints), aggregate those resources into manageable segments (Fabric Groups), and sub-allocate hybrid resources (Reservations) to the consumers (Business Groups).
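As a rough mental model of how these constructs relate, the sketch below expresses them as plain Python data classes. It is not vRA's API or data model; the class names, fields, and sample values (`vcenter-01`, `Cluster-A`, and so on) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Endpoint:
    """A source of resources, e.g. a vCenter Server or a public cloud account."""
    name: str
    compute_resources: List[str]


@dataclass
class FabricGroup:
    """A manageable segment of compute resources collected from endpoints."""
    name: str
    compute_resources: List[str] = field(default_factory=list)


@dataclass
class Reservation:
    """A share of one compute resource sub-allocated to a Business Group."""
    name: str
    business_group: str
    compute_resource: str
    memory_gb: int
    storage_gb: int


def reserved_memory(reservations: List[Reservation], business_group: str) -> int:
    """Total memory (GB) reserved for one Business Group."""
    return sum(r.memory_gb for r in reservations if r.business_group == business_group)


# Hypothetical sample data: one endpoint, one fabric group, three reservations.
vcenter = Endpoint("vcenter-01", ["Cluster-A", "Cluster-B"])
fabric = FabricGroup("Production", list(vcenter.compute_resources))
reservations = [
    Reservation("dev-res-a", "Development", "Cluster-A", memory_gb=128, storage_gb=2000),
    Reservation("dev-res-b", "Development", "Cluster-B", memory_gb=64, storage_gb=1000),
    Reservation("fin-res-a", "Finance", "Cluster-A", memory_gb=256, storage_gb=4000),
]

print(reserved_memory(reservations, "Development"))  # 192
```

The point of the model is the direction of flow: endpoints expose resources, fabric groups organize them, and reservations carve out a slice for each Business Group.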

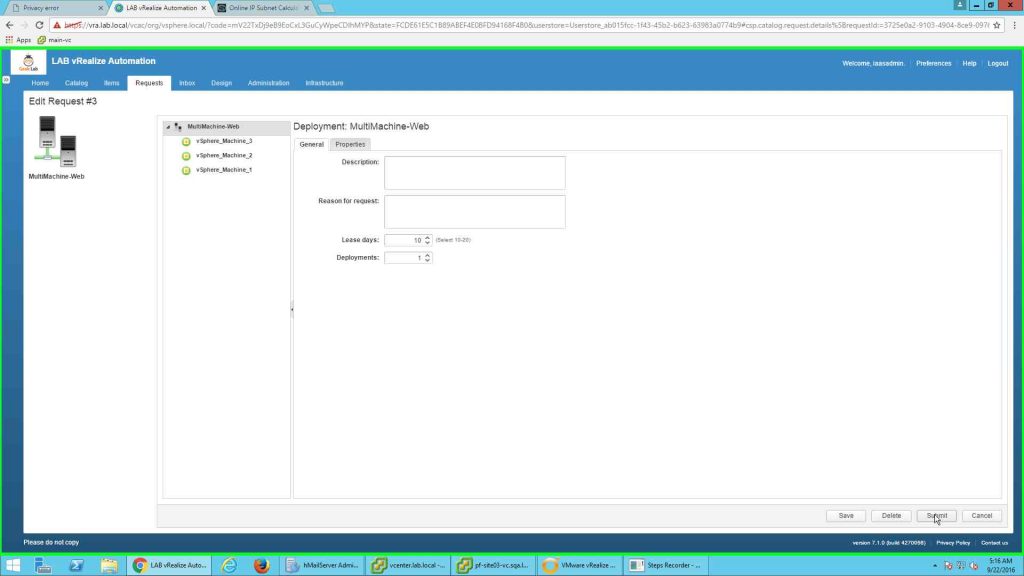

09, Creating IaaS Blueprints

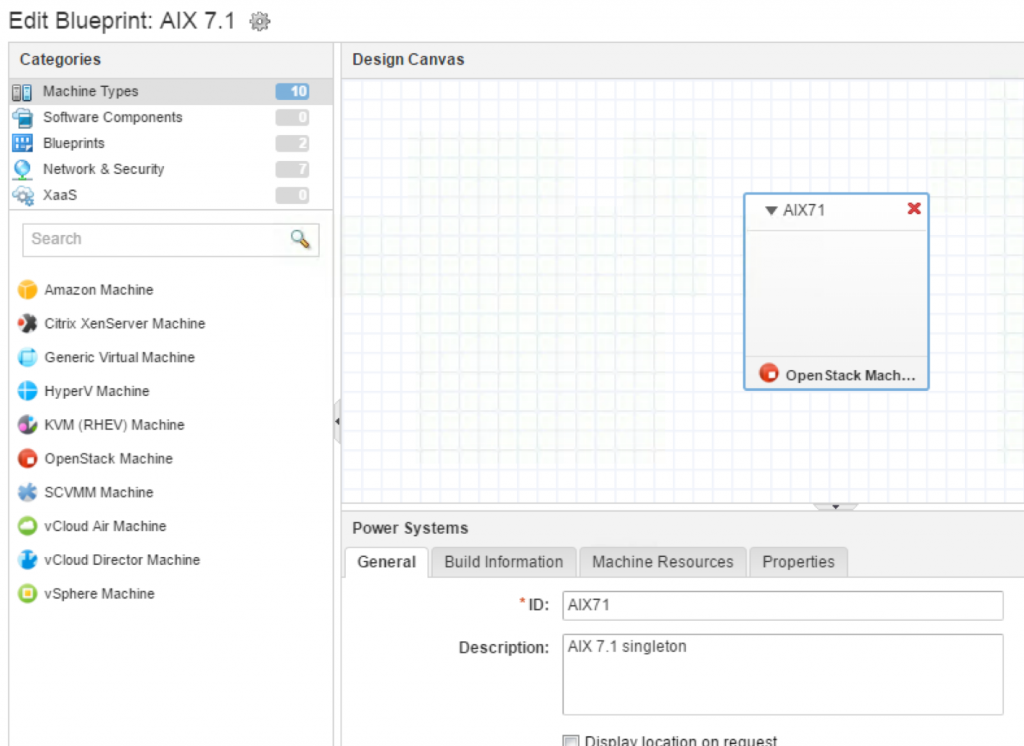

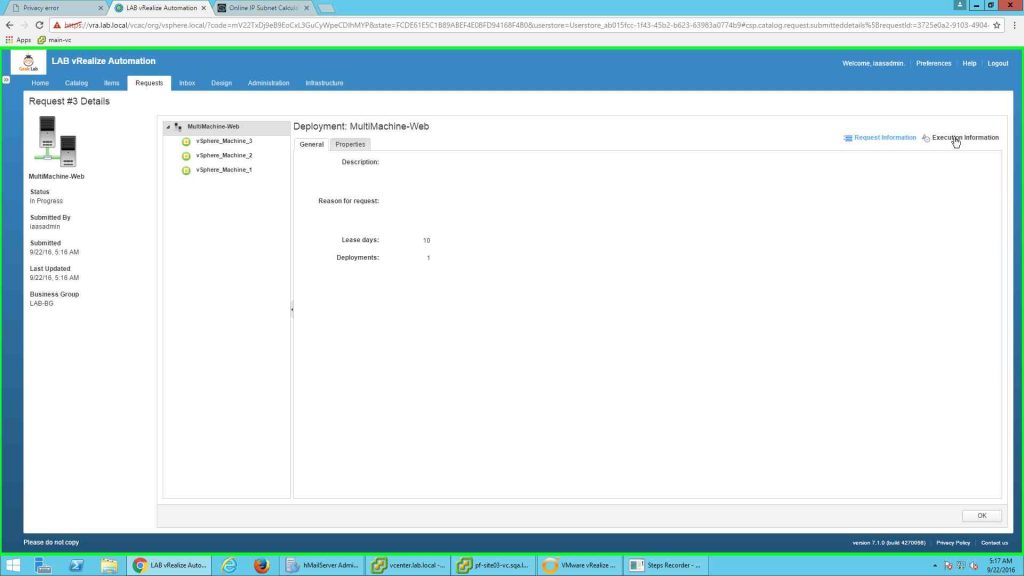

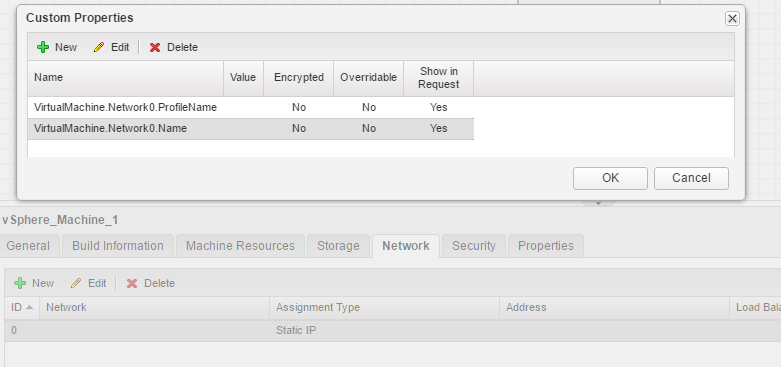

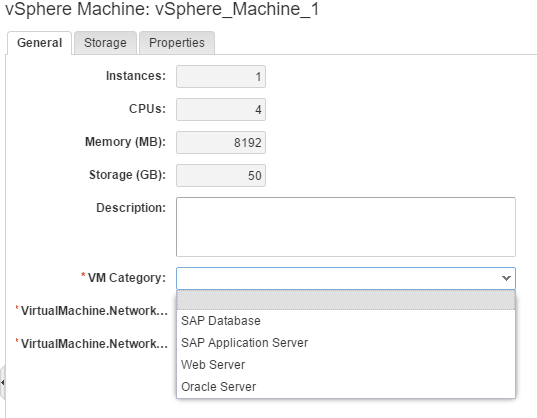

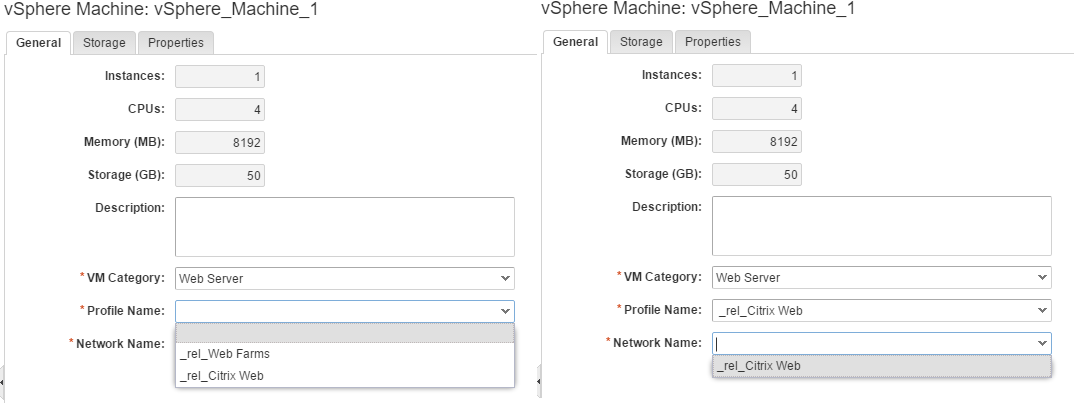

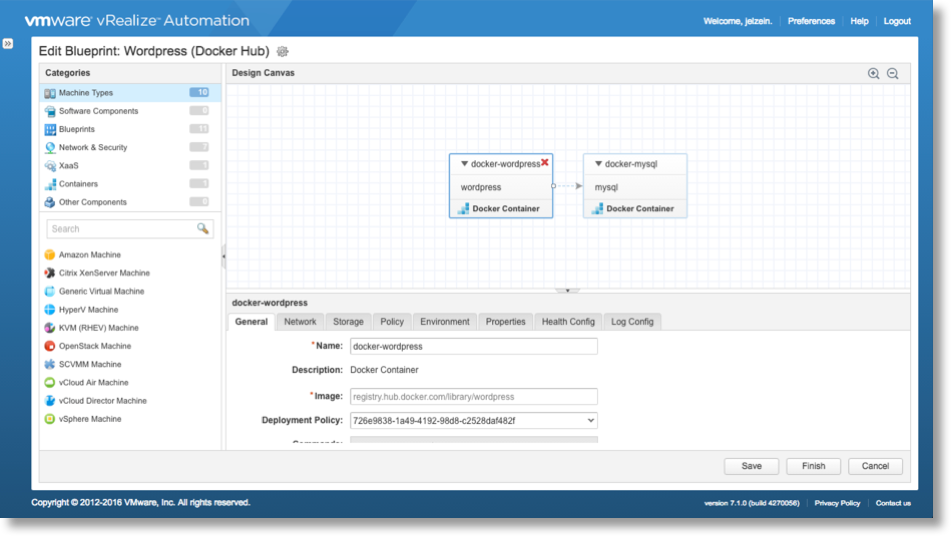

A Blueprint is a logical definition of a given application or service and must be created prior to publishing that service in the service catalog. That includes all traditional IaaS (Windows / Linux / Multi-Tier Apps), containerized applications, and XaaS (anything as a service). An IaaS blueprint also defines the resource configuration logic for the included service(s), including CPU, memory, storage, and network resource allocations for a given machine component. It also defines the workflow that will be used to provision the machine(s) at request time, depending on the desired outcome.

The Converged Blueprint (CBP) Designer is a single, converged designer for all blueprint authoring. Blueprints are now built on a dynamic drag-n-drop design canvas, allowing admins to choose any supported components, drag them onto the canvas, build dependencies, and publish the finished product to the catalog. Components include machine shells for all the supported platforms, software components, endpoint networks, NSX-provided networks, XaaS components, and even other blueprints that have already been published (yes, nested blueprints). Once a component is dragged over, the admin can build the necessary logic and any needed integration for each component of that particular service.

In this module, we will be creating a couple of example vSphere Blueprints (1 x Windows 2012 R2, 1 x CentOS 6.7) and preparing them to be published in the catalog (next section). Later, we’ll be adding additional configurations to each of the blueprints for more advanced use cases.

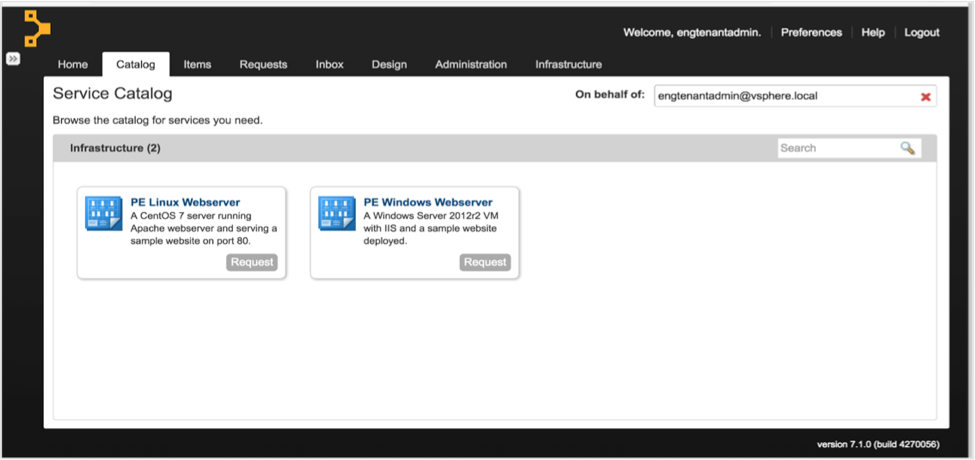

10, Catalog Management

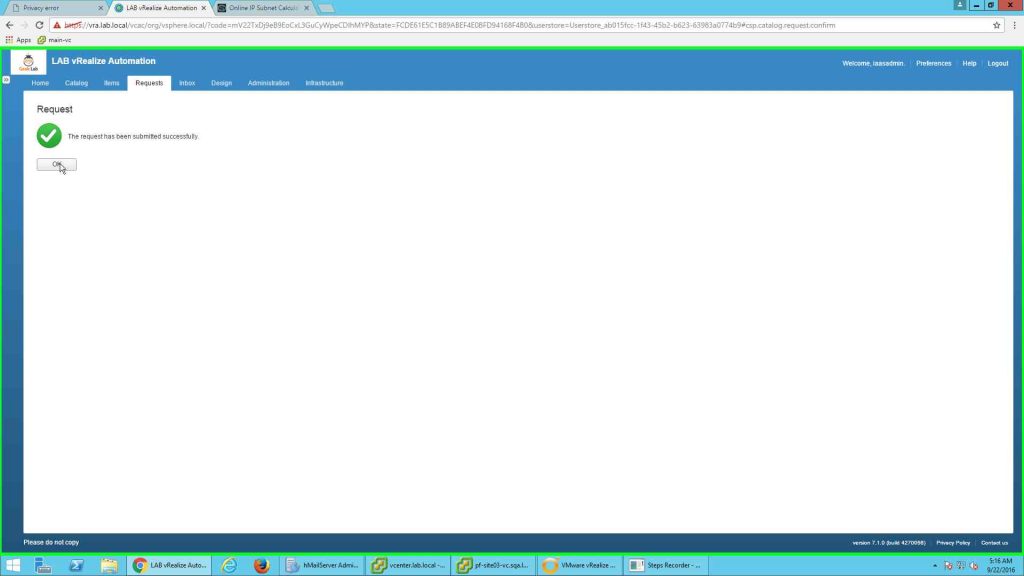

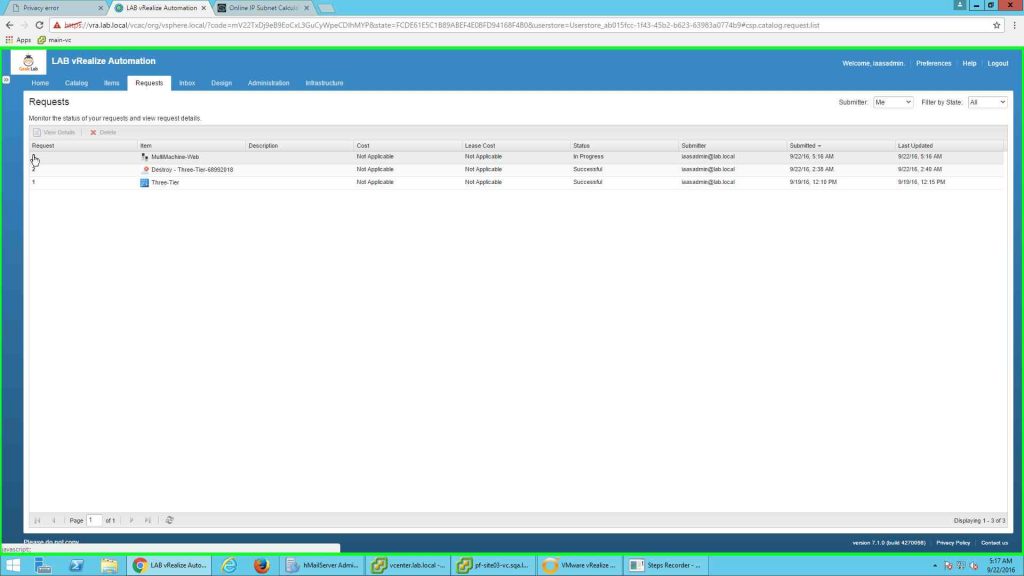

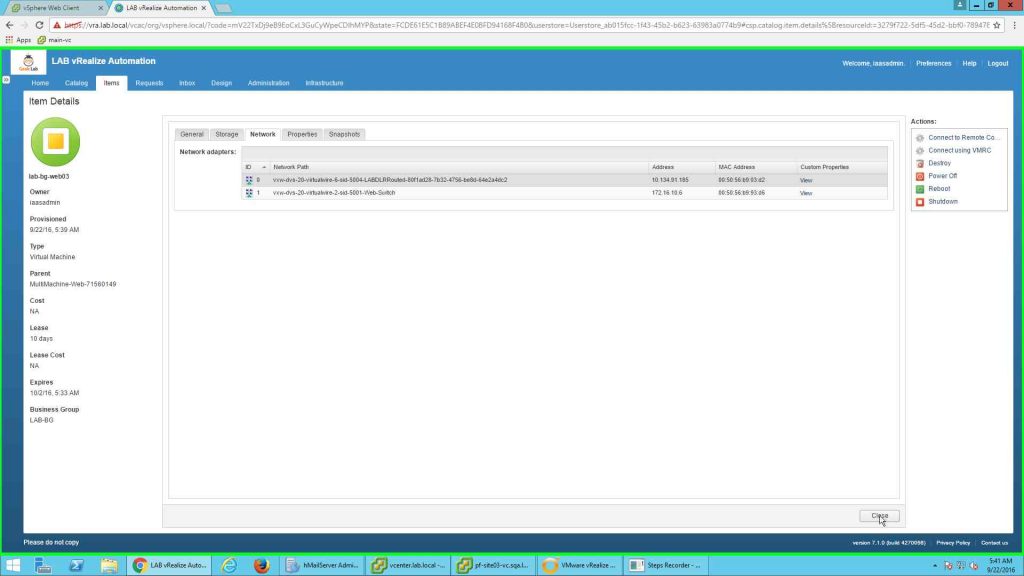

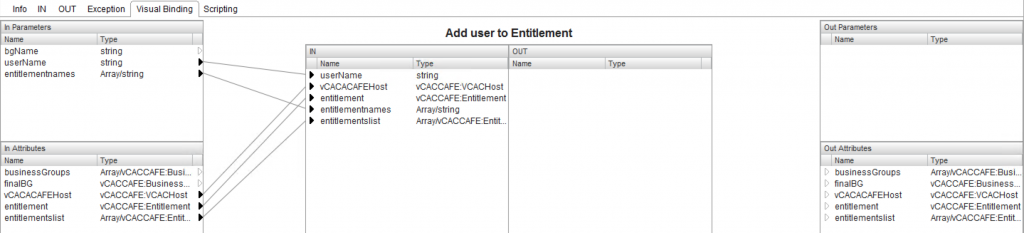

Once the blueprints have been created and published, you make them available for consumption in the unified Catalog. The Catalog is the self-service component of vRA, which provides any number of services to consumers. But before that can happen, you must determine which users or groups (e.g. Business Group users) will have access to each catalog item. vRA uses a rich set of policies to provide granularity that ensures services are only available to users that are specifically entitled to that particular service (or action).

Catalog Management consists of creating Services (e.g. categories), assigning published catalog items to a Service, and entitling one or more Business Group users to the item(s).
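The relationship between Services, catalog items, and entitlements can be sketched as a simple lookup. This is only an illustration of the idea, not how vRA evaluates entitlements internally; the `Entitlement` class, the `can_request` helper, and the sample users and Service name are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Entitlement:
    """Grants a set of Business Group users access to Services and/or items."""
    name: str
    business_group: str
    users: Set[str]
    services: Set[str] = field(default_factory=set)
    catalog_items: Set[str] = field(default_factory=set)


def can_request(user: str, item: str, item_service: Dict[str, str],
                entitlements: List[Entitlement]) -> bool:
    """True if any entitlement covers the item directly or through its Service."""
    for ent in entitlements:
        if user not in ent.users:
            continue
        if item in ent.catalog_items or item_service.get(item) in ent.services:
            return True
    return False


# Hypothetical catalog: both published blueprints sit in one Service.
item_service = {"Windows 2012 R2": "Infrastructure", "CentOS 6.7": "Infrastructure"}
entitlements = [
    Entitlement("Dev Entitlement", "Development",
                users={"alice"}, services={"Infrastructure"}),
]

print(can_request("alice", "CentOS 6.7", item_service, entitlements))  # True
print(can_request("bob", "CentOS 6.7", item_service, entitlements))    # False
```

Entitling a whole Service keeps the policy short, while entitling individual items gives finer control over who sees what.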

11, Approval Policies

Approval policies are optionally created to add governance and additional controls to any and all services. vRA provides a significant amount of granularity for triggering approval policies based on the catalog item, service type, component configuration, lifecycle state, or even based on the existence of a particular item. Once created, active approval policies are applied to Services, individual Catalog Items, and/or Actions in the Entitlements section.

Approval Policies can be triggered at request time (PRE), just prior to delivering the service to the consumer (POST), or a combination of the two. For example, a manager’s approval can be required at request time (before provisioning begins) and another approval can be required for final inspection prior to making the service available to the requesting consumer. For traditional IaaS machines, a policy can also include options that allow the approver to modify the request prior to approving (e.g. memory or CPU configuration). At provisioning time, the approver is notified of the pending request. Once approved, the request moves forward. If it is rejected, the request is canceled and the user is notified of the rejection.

In this section, we will create three approval policies — one that is triggered based on configurations (cpu count), one that requires a Business Group manager’s approval and one that is triggered when a particular day-2 action is invoked.
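The PRE/POST flow, combined with a configuration-based trigger such as CPU count, can be sketched roughly as follows. This is a simplified illustration rather than vRA's approval engine; the function names, the threshold of 4 CPUs, and the status strings are assumptions.

```python
from typing import Callable, Optional

# An approver is any callable that inspects a request and returns
# True to approve or False to reject.
Approver = Callable[[dict], bool]


def needs_cpu_approval(request: dict, cpu_threshold: int = 4) -> bool:
    """Trigger condition: only requests above the CPU threshold need approval."""
    return request.get("cpu", 1) > cpu_threshold


def process_request(request: dict,
                    pre_approver: Optional[Approver] = None,
                    post_approver: Optional[Approver] = None,
                    provision: Callable[[dict], None] = lambda r: None) -> str:
    """Walk a request through optional PRE and POST approvals."""
    # PRE: evaluated at request time, before any provisioning starts.
    if pre_approver is not None and needs_cpu_approval(request):
        if not pre_approver(request):
            return "rejected (pre)"

    provision(request)

    # POST: evaluated after provisioning, before delivery to the consumer.
    if post_approver is not None and not post_approver(request):
        return "rejected (post)"
    return "delivered"


always_reject = lambda request: False
always_approve = lambda request: True

print(process_request({"cpu": 2}, pre_approver=always_reject))   # delivered
print(process_request({"cpu": 8}, pre_approver=always_reject))   # rejected (pre)
print(process_request({"cpu": 8}, pre_approver=always_approve,
                      post_approver=always_reject))              # rejected (post)
```

Note that the 2-CPU request is delivered even with a rejecting approver, because the trigger condition is never met and the policy does not fire.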

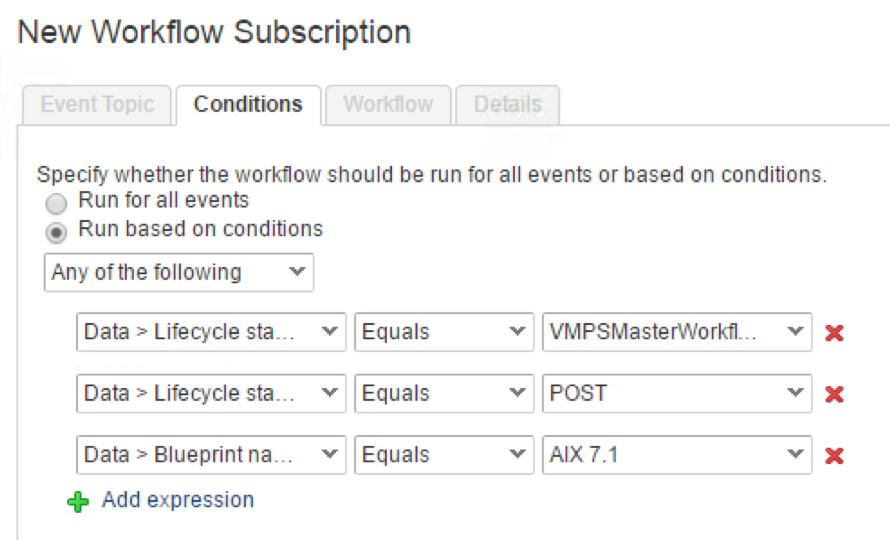

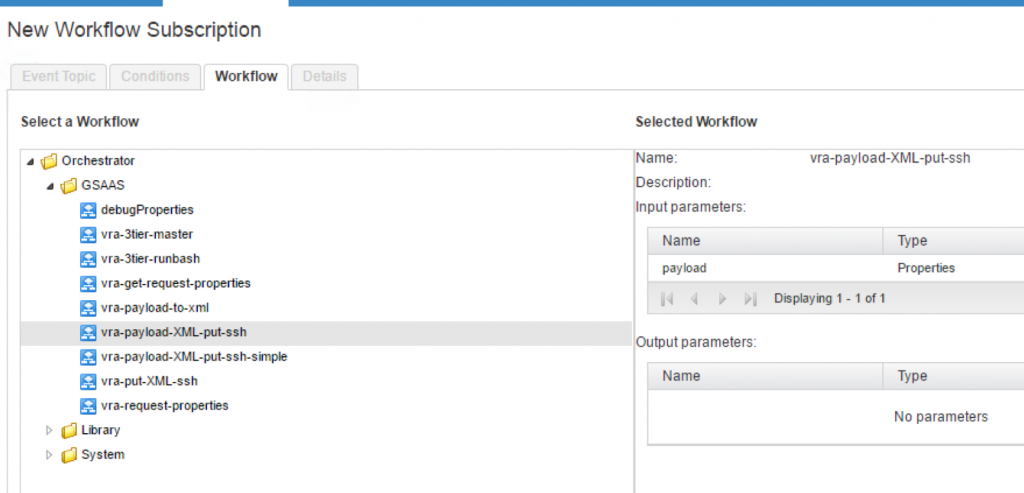

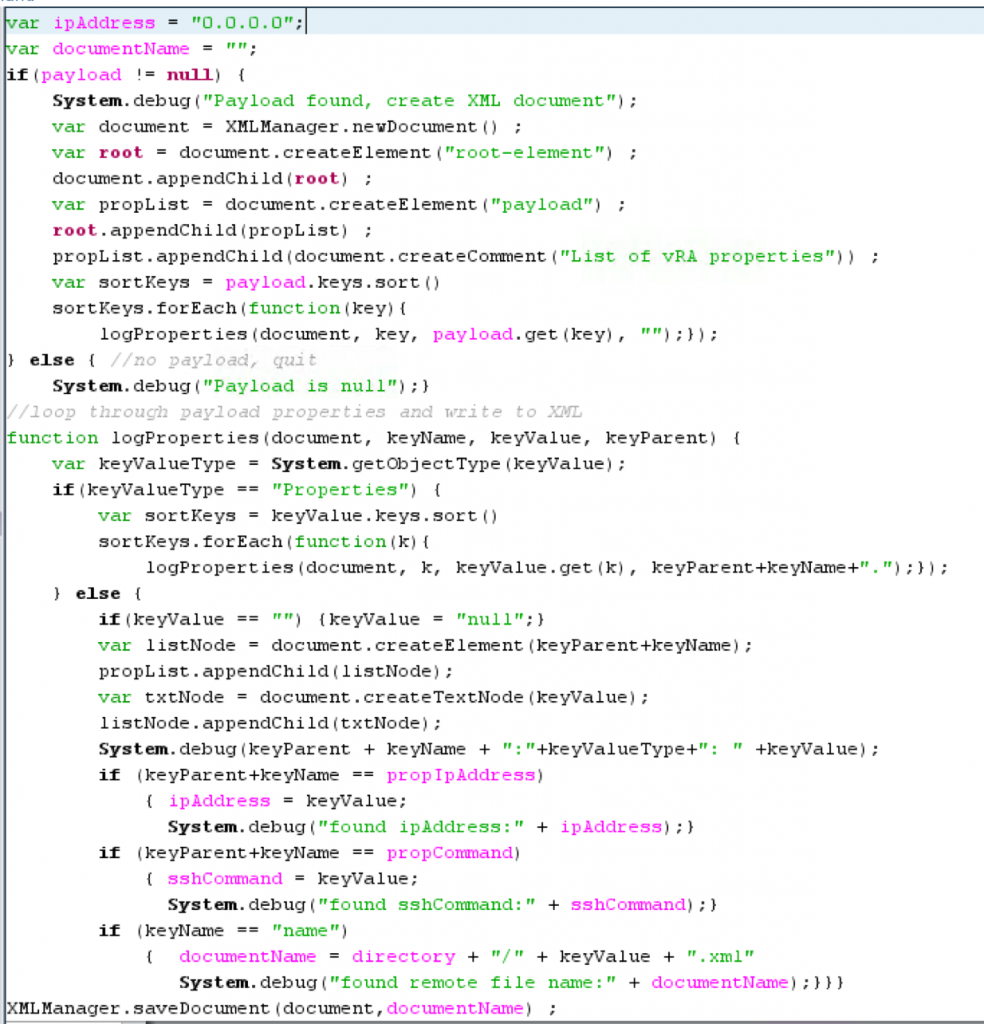

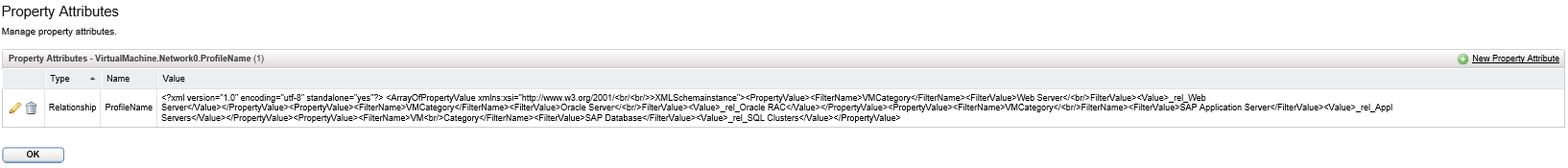

12.1, Extensibility Basics

It’s really difficult to summarize vRA’s extensibility in one or two paragraphs, but I’ll give it a shot. Extensibility refers to any configuration or customization that modifies vRA’s default behavior. This can include customizing the user experience at request time (e.g. adding enhanced configuration options, requiring specific inputs, etc.) and incorporating ecosystem tools and binding them to a machine’s lifecycle (e.g. load balancers, CMDB/ITSM tools, IPAM, Active Directory, configuration management, and so on).

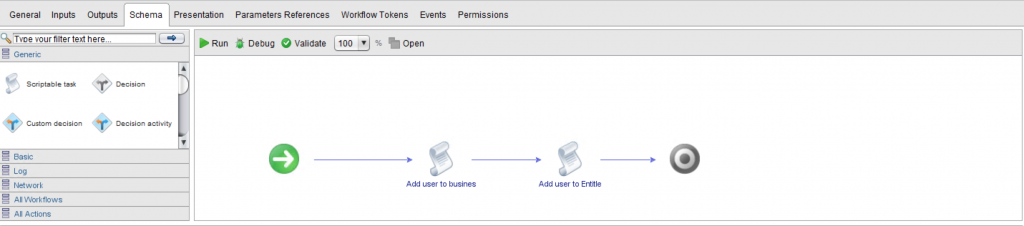

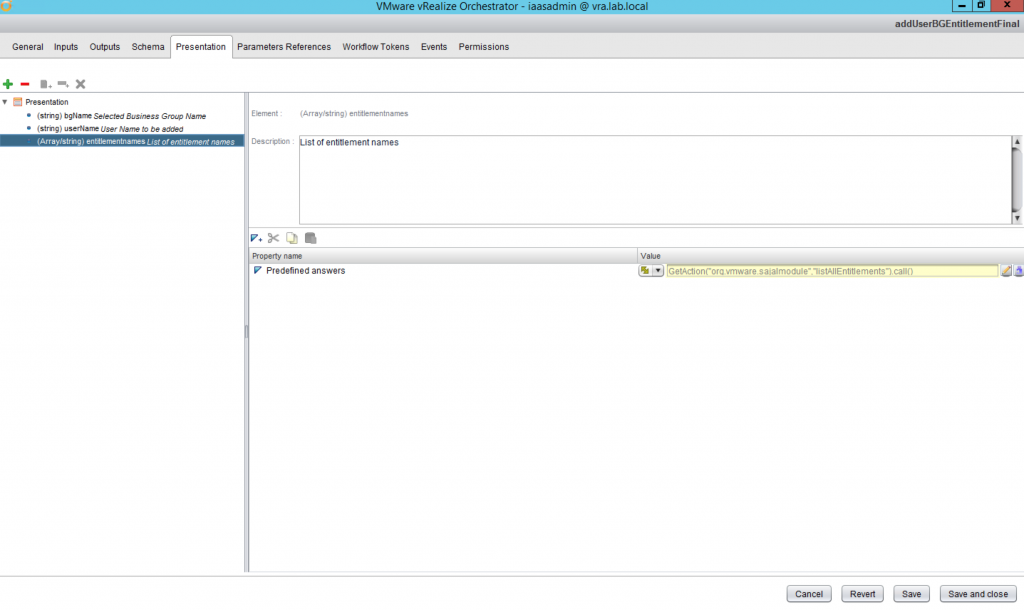

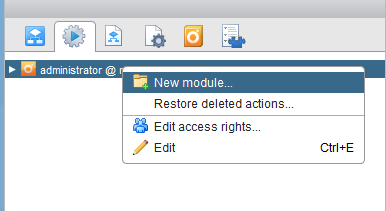

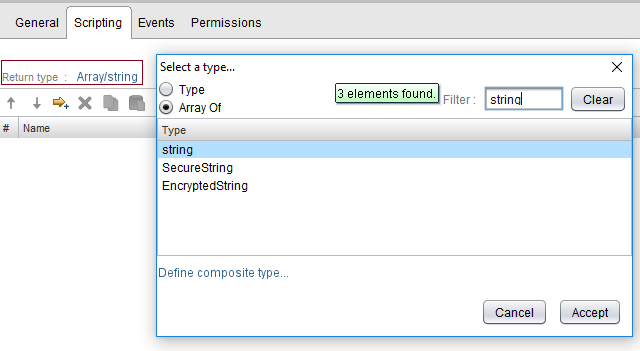

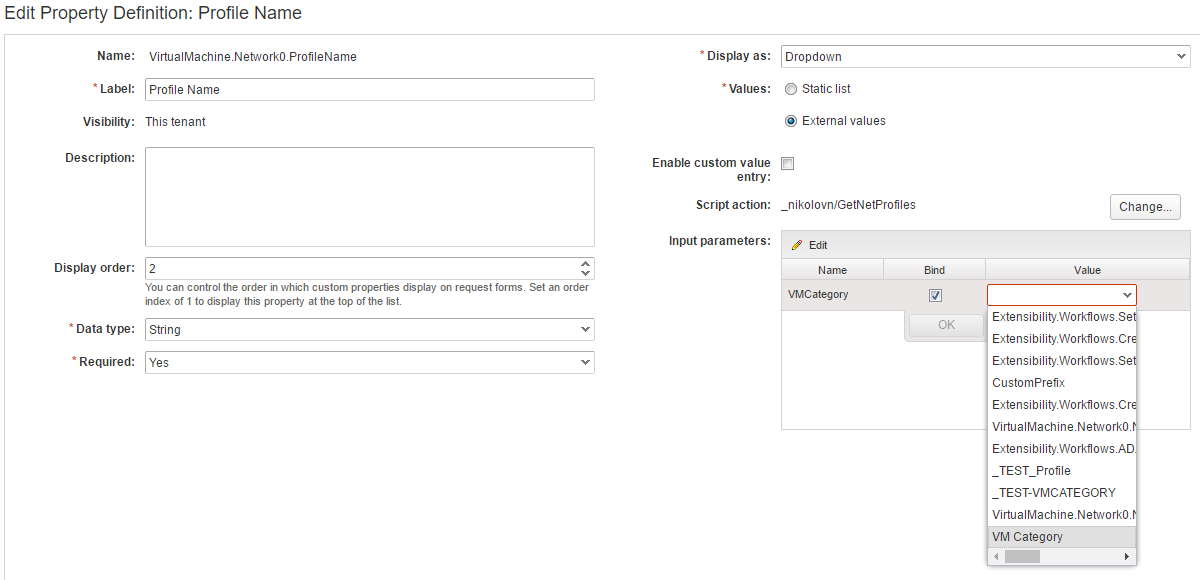

vRA’s vast extensibility capabilities can be as basic or as complex as required. But ultimately they are designed to ensure vRA is plugged in to the broader ecosystem of tools and services based on the business needs. Many lifecycle extensibility services are configured and managed within vRA’s UI (e.g. Property Dictionary, Custom Properties, AD Integration, Event Broker, and XaaS). But one of the most important components of Extensibility is vRealize Orchestrator (vRO), which can be consumed within vRA but is managed in its own UI (vRO control center, vRO client).

In this module we’ll be getting our feet wet with Extensibility. I’ll provide an overview of vRA’s extensibility tools and usage and an introduction to the Property Dictionary, Custom Properties, and vRealize Orchestrator. I’ll introduce and put to use the Event Broker and XaaS — two critical pieces to vRA’s extensibility — in later modules.

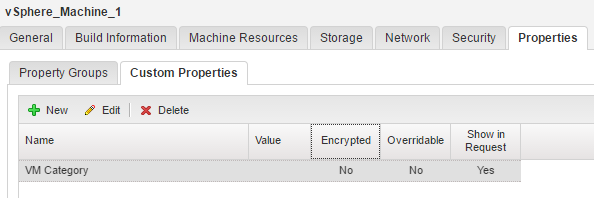

12.2, Simple Extensibility Use Cases

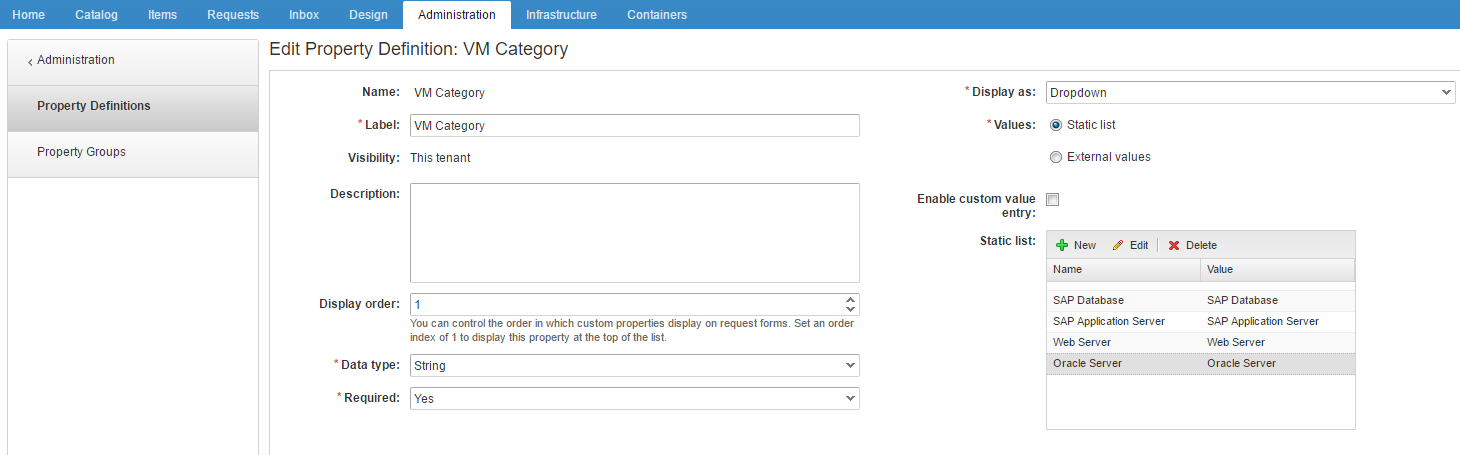

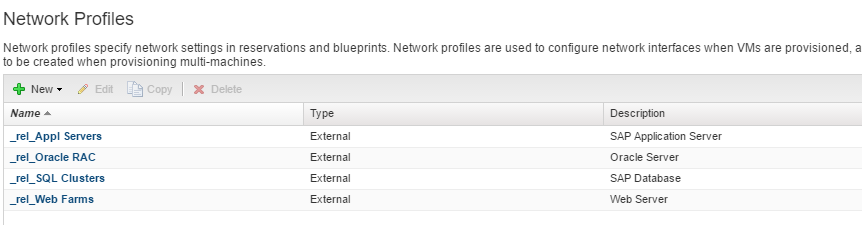

Now that you have a general understanding of vRA’s extensibility capabilities, let’s put some of that knowledge to use. In this module we’ll be applying it to some basic use cases. We’ll use Custom Properties to control vCenter folder placement of provisioned machines, create a Property Definition to provide resource placement options (via drop-down) at request time, and create Active Directory policies for each Business Group to define where we want machine objects placed in Active Directory.
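To make the first two use cases a little more concrete, here is a minimal sketch of how custom properties end up as name/value pairs attached to a request. It assumes `VMware.VirtualCenter.Folder` as the folder-placement property; the `vRA/<Business Group>` folder scheme, the `Example.Placement.Cluster` property name, and the drop-down options are hypothetical and would differ in your environment.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class DropDownDefinition:
    """A property definition rendered as a drop-down at request time."""
    name: str                # custom property name written to the request
    label: str               # text shown to the requesting user
    options: Dict[str, str]  # display label -> stored value

    def resolve(self, choice: str) -> Dict[str, str]:
        """Turn the user's selection into a custom property name/value pair."""
        if choice not in self.options:
            raise ValueError(f"{choice!r} is not a valid option for {self.label}")
        return {self.name: self.options[choice]}


def folder_property(business_group: str, root: str = "vRA") -> Dict[str, str]:
    """Place a Business Group's machines in its own vCenter folder."""
    return {"VMware.VirtualCenter.Folder": f"{root}/{business_group}"}


# Hypothetical drop-down offering two placement targets.
placement = DropDownDefinition(
    name="Example.Placement.Cluster",
    label="Placement",
    options={"Production": "Cluster-A", "Test": "Cluster-B"},
)

props = {**folder_property("Development"), **placement.resolve("Test")}
print(props)
# {'VMware.VirtualCenter.Folder': 'vRA/Development', 'Example.Placement.Cluster': 'Cluster-B'}
```

In vRA itself these values are set through the UI (on the Business Group, blueprint, or Property Dictionary) rather than in code; the sketch only shows the shape of the data.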

These are just the basics to get your feet wet. Extensibility will play a big part in many more modules later.

That’s it for now! Now that we’ve got the basics out of the way, the next set of videos will dive into more advanced topics, such as software authoring, container management, SDDC integration (VSAN, NSX), and several advanced extensibility use cases.

Be sure to refer back to the full guide for detailed configuration steps or more info on any given topic.

+++++

@virtualjad

The post vRealize Automation 7.2 Detailed Implementation Video Guide appeared first on VMware Cloud Management.

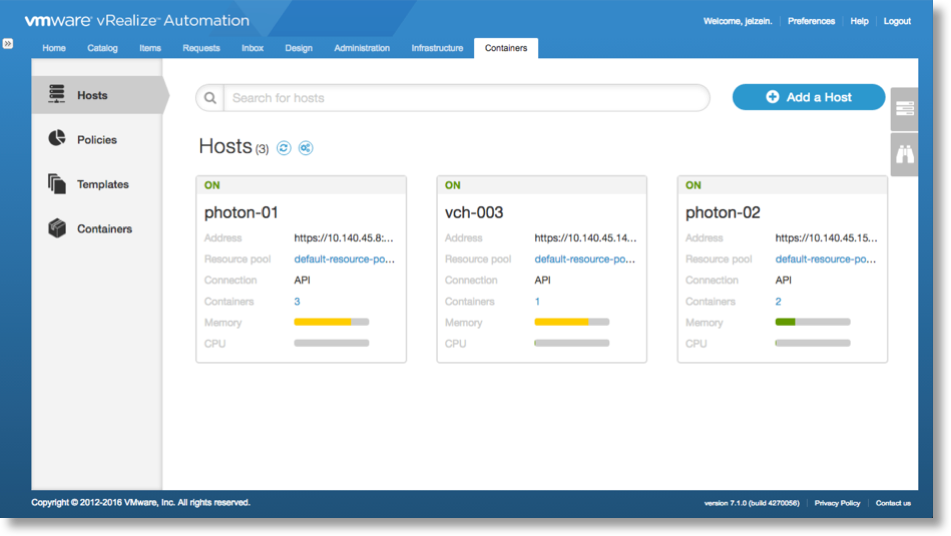

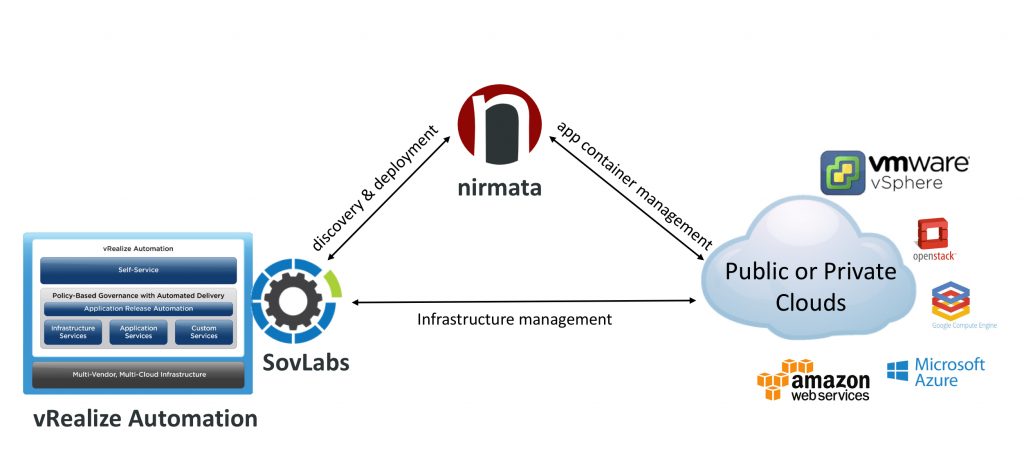

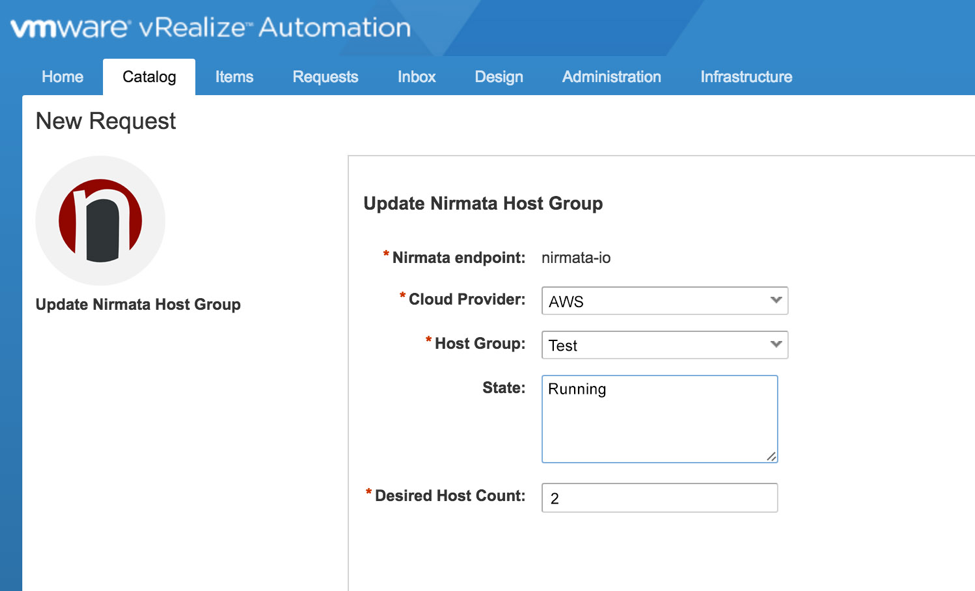

Application teams will have the ability to build hybrid deployments consisting of VMs and containers. Cloud administrators will be able to manage container hosts and apply governance to their usage including capacity quotas or approval workflows.
