With the development of newer and faster load balancers, or Application Delivery Controllers (ADCs), we are seeing more and more use cases where SSL termination is required. These include SSL offloading and optimization, content caching and filtering, application firewalls and many others. In this guide we will cover the basic SSL-to-SSL configuration of VMware NSX, F5 BIG-IP and Citrix NetScaler with vRealize Automation 7.x.

Keep in mind, however, that modern ADCs provide many features and options. While most of them will probably work, we cannot cover every possible combination. Please test any changes in your lab environment before deploying them in production.

SSL offloading

When talking about SSL offloading, we usually imagine a connection in which the client-server SSL session is terminated at the ADC and the connection between the ADC and the back-end systems is not encrypted. This way the burden of encrypting and decrypting the traffic is left to the ADC. We can also do some interesting things at the ADC, such as content rewriting, URL redirection, application firewalling and more. However, since the traffic to the back-end systems is not encrypted, any compromise of our internal network would expose sensitive information. That is why SSL-Plain mode is not supported by vRA.

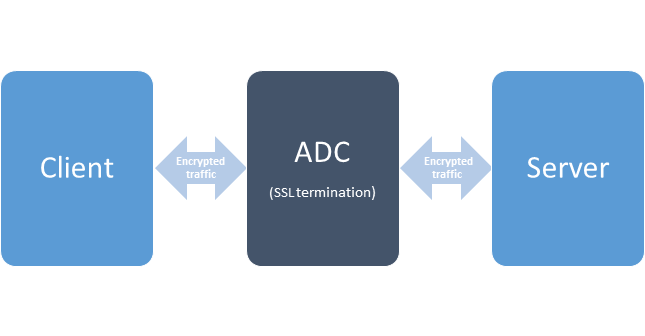

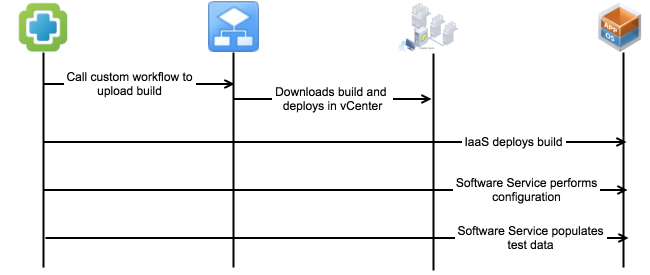

Since SSL-Plain is too risky, but we still want the advantages of a modern ADC, we can terminate SSL at the ADC and talk to the back-end systems over an encrypted channel. In SSL-SSL mode, the client-to-ADC connection is encrypted in one SSL session and the ADC-to-back-end connection is encrypted in another SSL session. This way we still get the performance boost and can do advanced content operations, without the risk of exposing unencrypted traffic.
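The two independent sessions can be pictured with Python's standard `ssl` module: one context that presents the certificate to clients and terminates their session, and a second, verifying context for the connection toward the back end. This is only an illustration of the SSL-SSL idea under our own assumptions (the function names and the `cafile` parameter are hypothetical); it is not how NSX, BIG-IP or NetScaler implement it internally.

```python
import ssl
from typing import Optional


def make_frontend_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Session 1 (client -> ADC): the ADC presents the certificate and terminates TLS."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx


def make_backend_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Session 2 (ADC -> vRA node): a separate TLS session toward the back-end server.

    SERVER_AUTH contexts require a valid certificate and a matching hostname,
    so the re-encrypted leg is authenticated as well as encrypted.
    """
    # cafile is assumed to point at the CA that issued the vRA certificate.
    return ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=cafile)
```

In a real proxy, the first context would wrap each accepted client socket with `server_side=True`, and the second would wrap the outgoing back-end socket with `server_hostname` set to the vRA node's DNS name.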

This mode can be best described using the following figure:

Multi-arm configuration

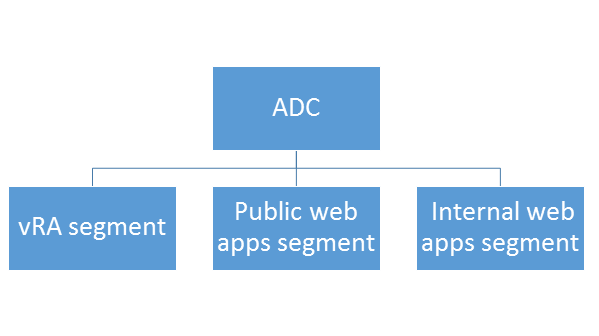

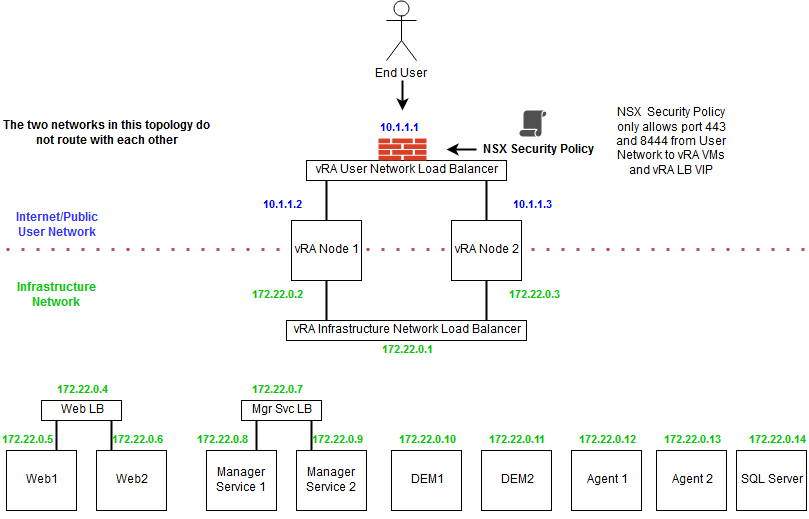

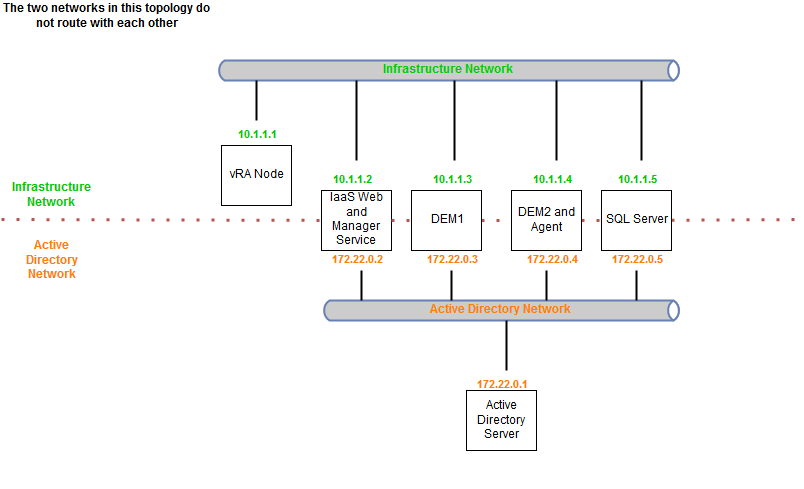

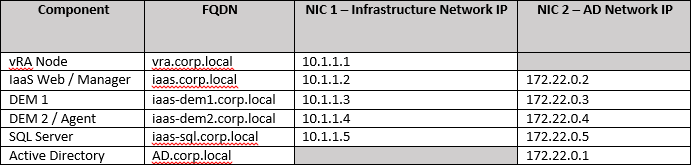

Traditionally, vRA deployments use a one-arm topology, in which the ADC and the vRA components sit on the same network. While simple, this topology is not always optimal if we want to achieve service isolation. This is why here we will use a multi-arm topology, where the ADC and the vRA components are deployed on different networks.
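Whether a set of addresses really forms a multi-arm layout comes down to the VIPs and the pool members living in different subnets. A minimal sketch using Python's `ipaddress` module; the /24 prefix length is an assumption, since the lab's subnet masks are not stated, and `is_multi_arm` is a hypothetical helper.

```python
from __future__ import annotations

import ipaddress


def is_multi_arm(vip: str, members: list[str], prefix: int = 24) -> bool:
    """True when no pool member shares the VIP's subnet (prefix length is assumed)."""
    vip_net = ipaddress.ip_network(f"{vip}/{prefix}", strict=False)
    return all(ipaddress.ip_address(m) not in vip_net for m in members)


# vRA appliance VIP and members from the example certificate table below.
print(is_multi_arm("10.23.89.101", ["10.23.90.223", "10.23.90.224"]))
```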

This topology can be best described using the following figure:

Certificates

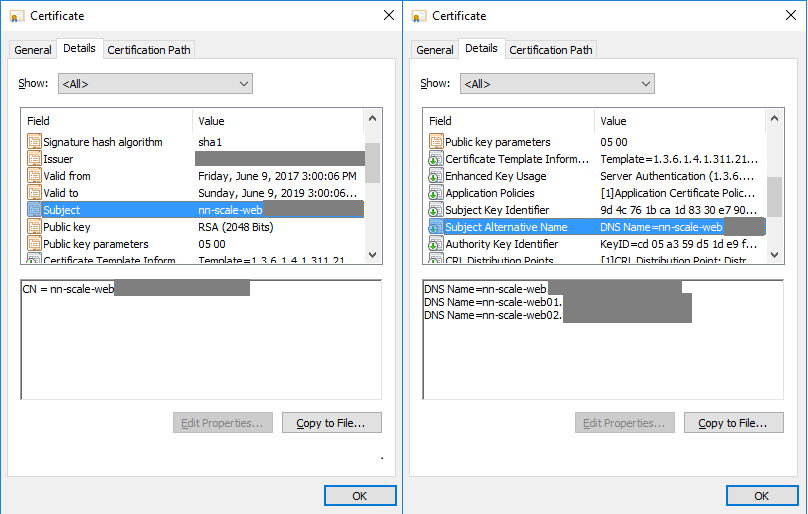

For simplicity, in this guide we use the same certificate/private key pair on the ADC and the vRA components. It is possible to use different certificates; however, since some of the vRA internal communication goes through the ADC, you have to make sure that the vRA components trust the ADC certificate.

When issuing your certificate make sure that it includes the following attributes:

Common Name (CN) = the ADC virtual server used for the vRA appliances

Subject Alternative Name (SAN) = the ADC virtual servers for IaaS Web and IaaS Manager, the DNS names of all vRA components, and the IPs of all ADC virtual servers and all vRA components

Example:

| Type | Component | Record |
|---|---|---|
| CN | ADC for vRA appliances | vralb.example.com |
| SAN | ADC for IaaS Web and Manager | weblb.example.com, mgrlb.example.com |
| SAN | vRA appliances | vra01.example.com, vra02.example.com |
| SAN | IaaS machines | web01.example.com, web02.example.com, mgr01.example.com, mgr02.example.com |
| SAN | All IPs of both ADC and vRA machines | 10.23.89.101, 10.23.90.223, 10.23.90.224, 10.23.89.102, 10.23.90.226, 10.23.90.227, 10.23.89.103, 10.23.90.228, 10.23.90.229 |
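When generating the certificate request, the SAN list from the table has to be written as a single OpenSSL-style `subjectAltName` value (`DNS:` and `IP:` entries separated by commas). A small sketch that assembles it; the helper name is hypothetical. It also repeats the CN as a DNS SAN, since many TLS clients ignore the CN entirely when a SAN extension is present.

```python
from __future__ import annotations

import ipaddress


def build_subject_alt_name(dns_names: list[str], ip_addresses: list[str]) -> str:
    """Return an OpenSSL-style subjectAltName value such as 'DNS:a,IP:10.0.0.1'."""
    entries = [f"DNS:{name}" for name in dns_names]
    # ip_address() raises ValueError on a typo instead of writing a bad SAN entry.
    entries += [f"IP:{ipaddress.ip_address(ip)}" for ip in ip_addresses]
    return ",".join(entries)


dns = [
    "vralb.example.com",  # CN repeated as a SAN
    "weblb.example.com", "mgrlb.example.com",
    "vra01.example.com", "vra02.example.com",
    "web01.example.com", "web02.example.com",
    "mgr01.example.com", "mgr02.example.com",
]
ips = [
    "10.23.89.101", "10.23.90.223", "10.23.90.224",
    "10.23.89.102", "10.23.90.226", "10.23.90.227",
    "10.23.89.103", "10.23.90.228", "10.23.90.229",
]
print("subjectAltName = " + build_subject_alt_name(dns, ips))
```

With OpenSSL 1.1.1 or later, the resulting string can be passed to `openssl req` through its `-addext` option.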

More information about vRealize Automation certificates can be found here.

But enough theory. In the next sections we will learn how to configure NSX, NetScaler and BIG-IP with vRA 7.

In our lab we used the following product versions: NSX 6.2 and 6.3, NetScaler 11.0, BIG-IP 11.6, and vRA 7.2 and 7.3.

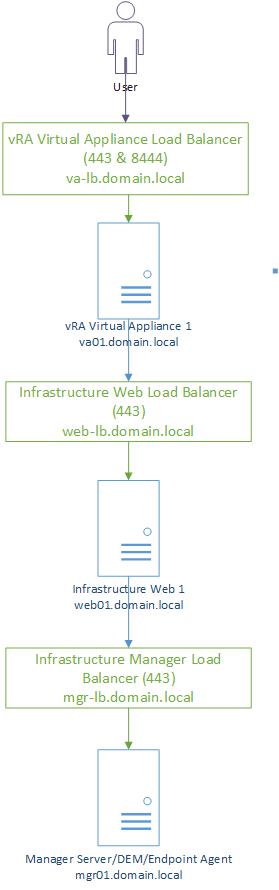

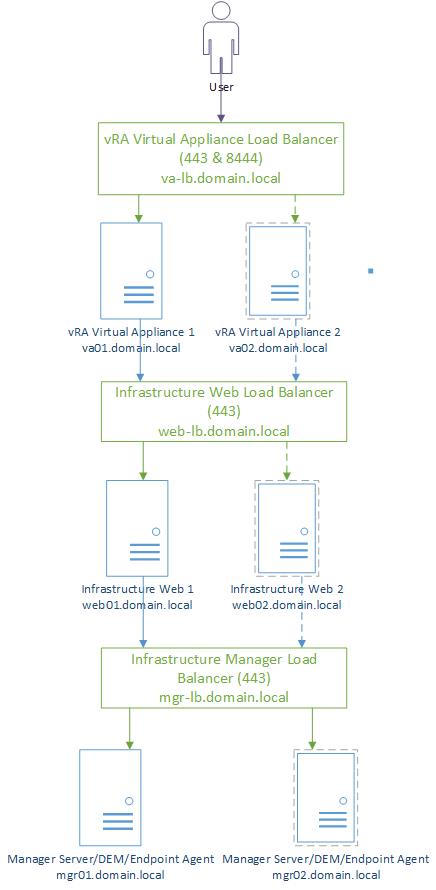

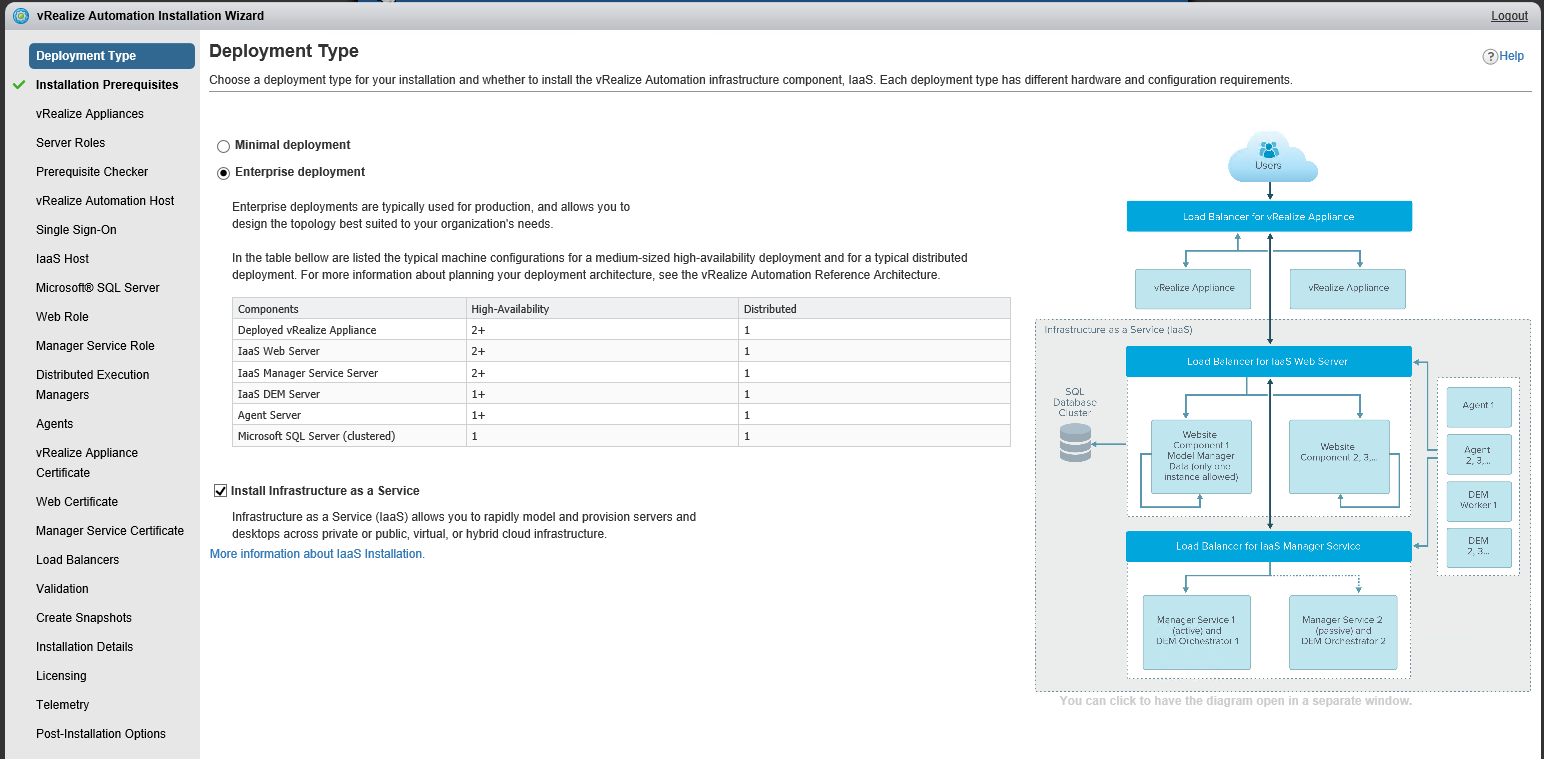

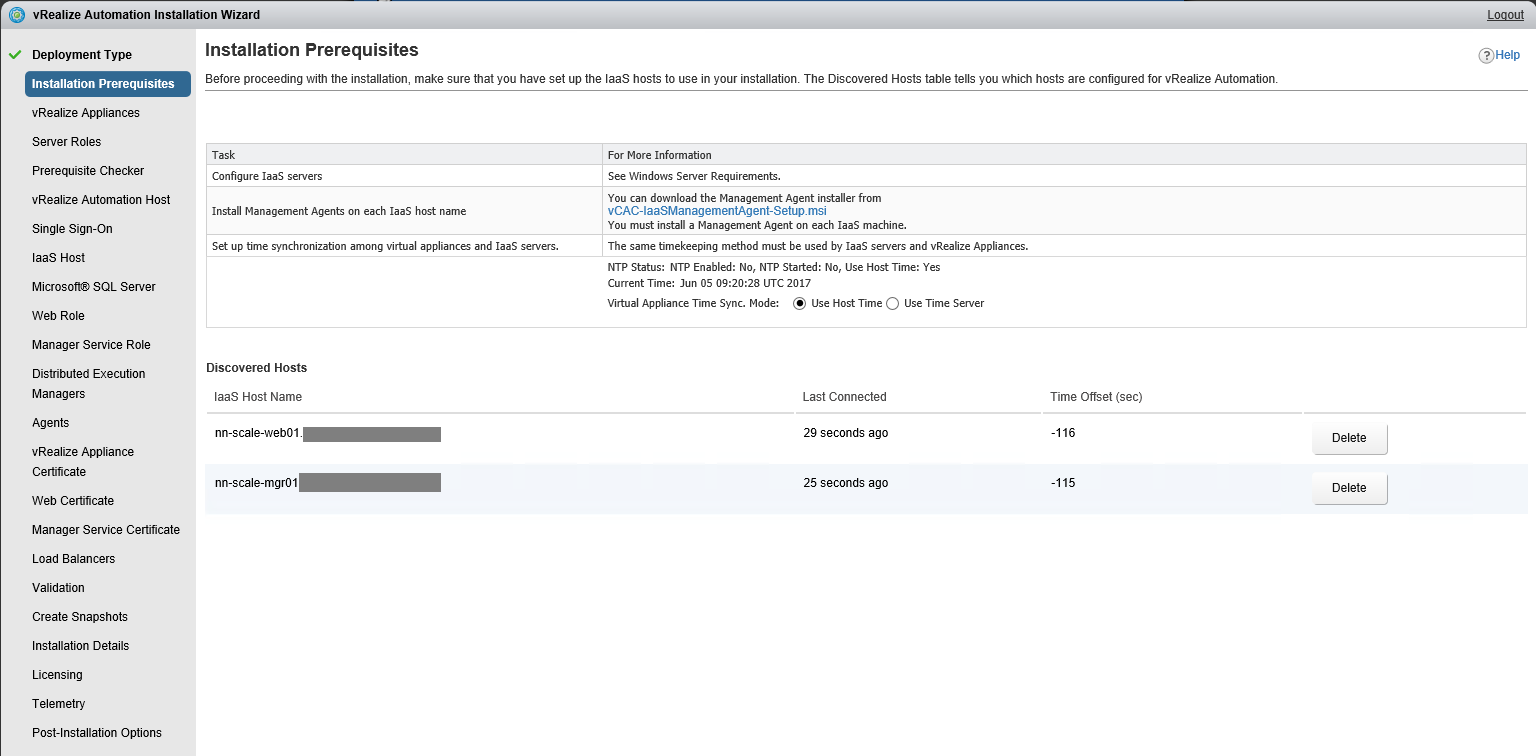

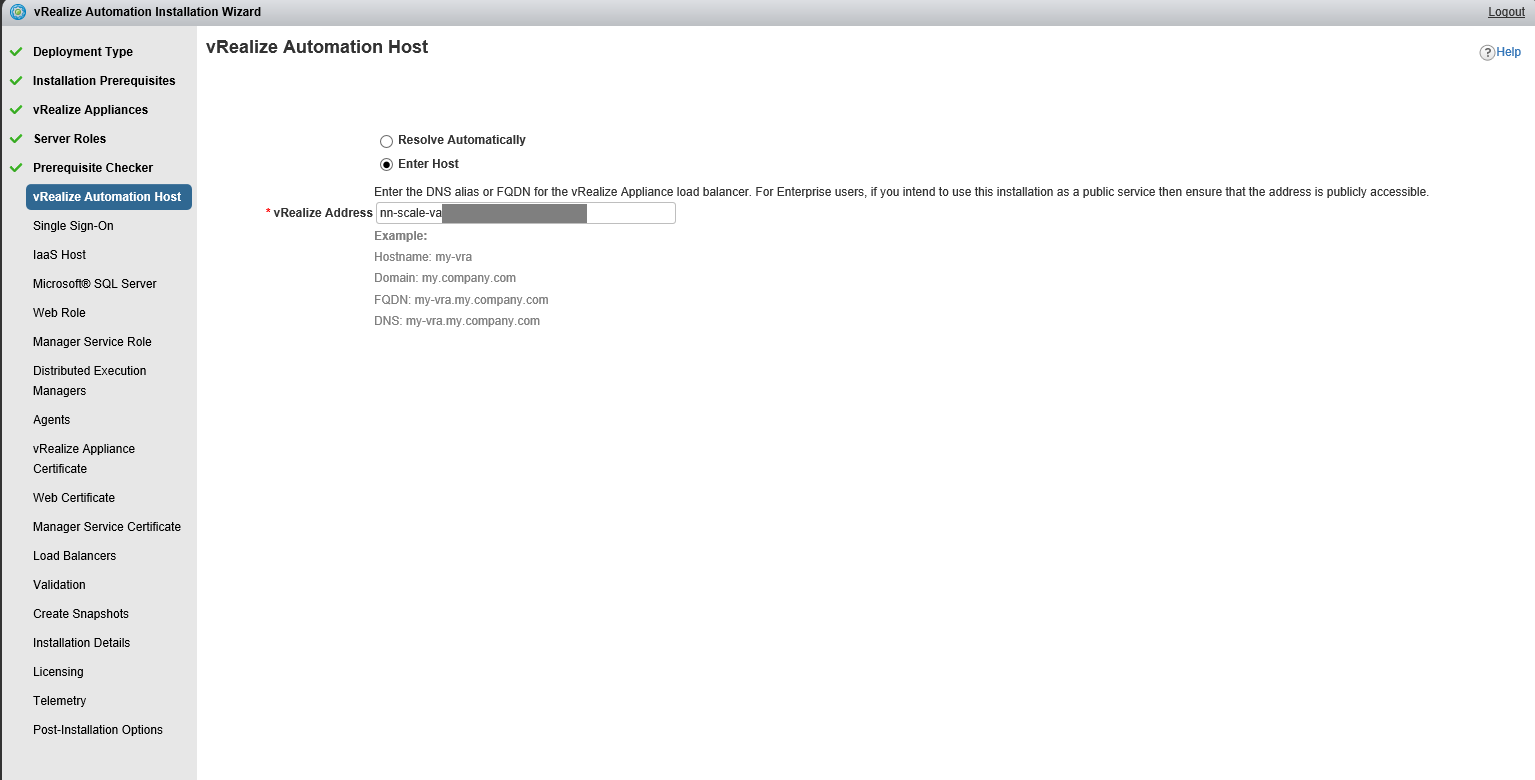

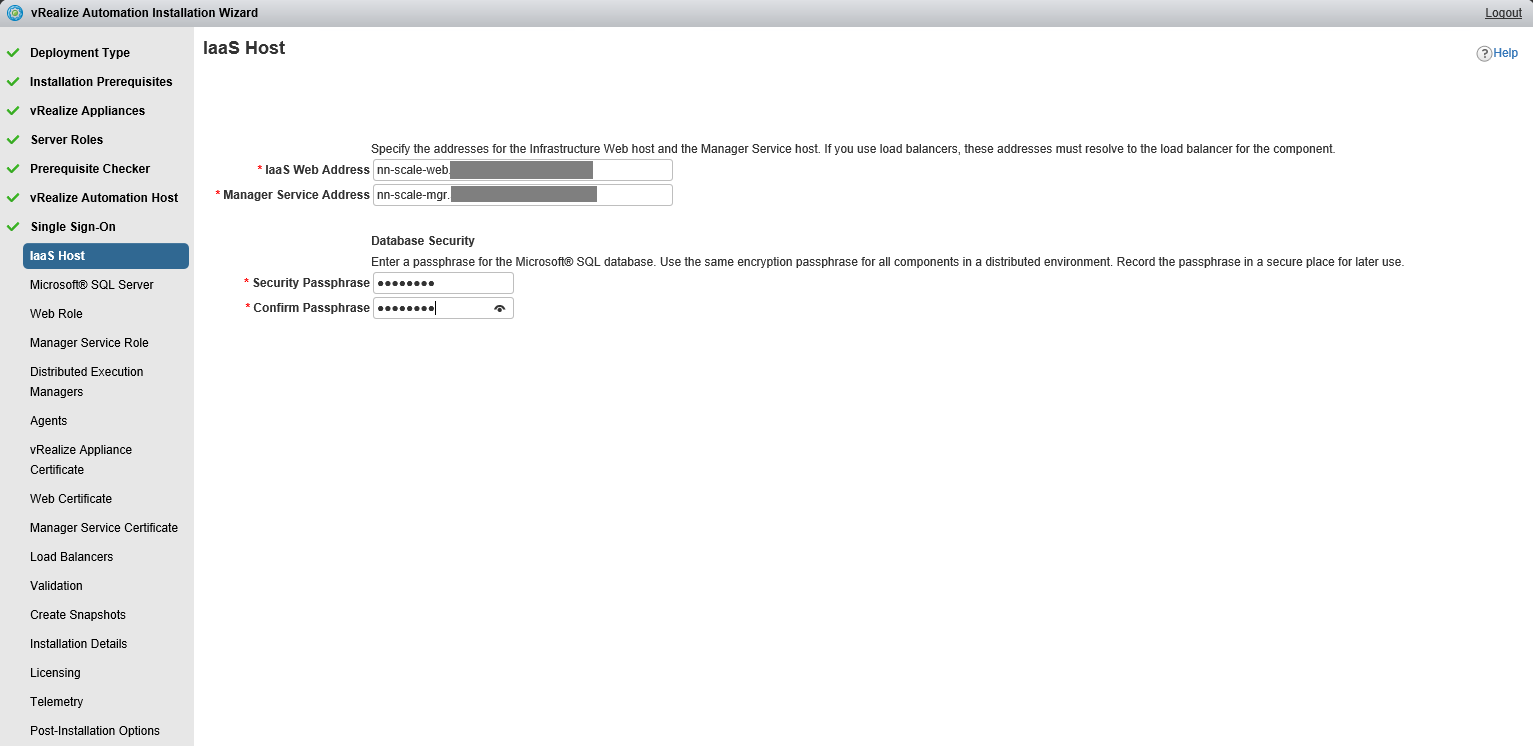

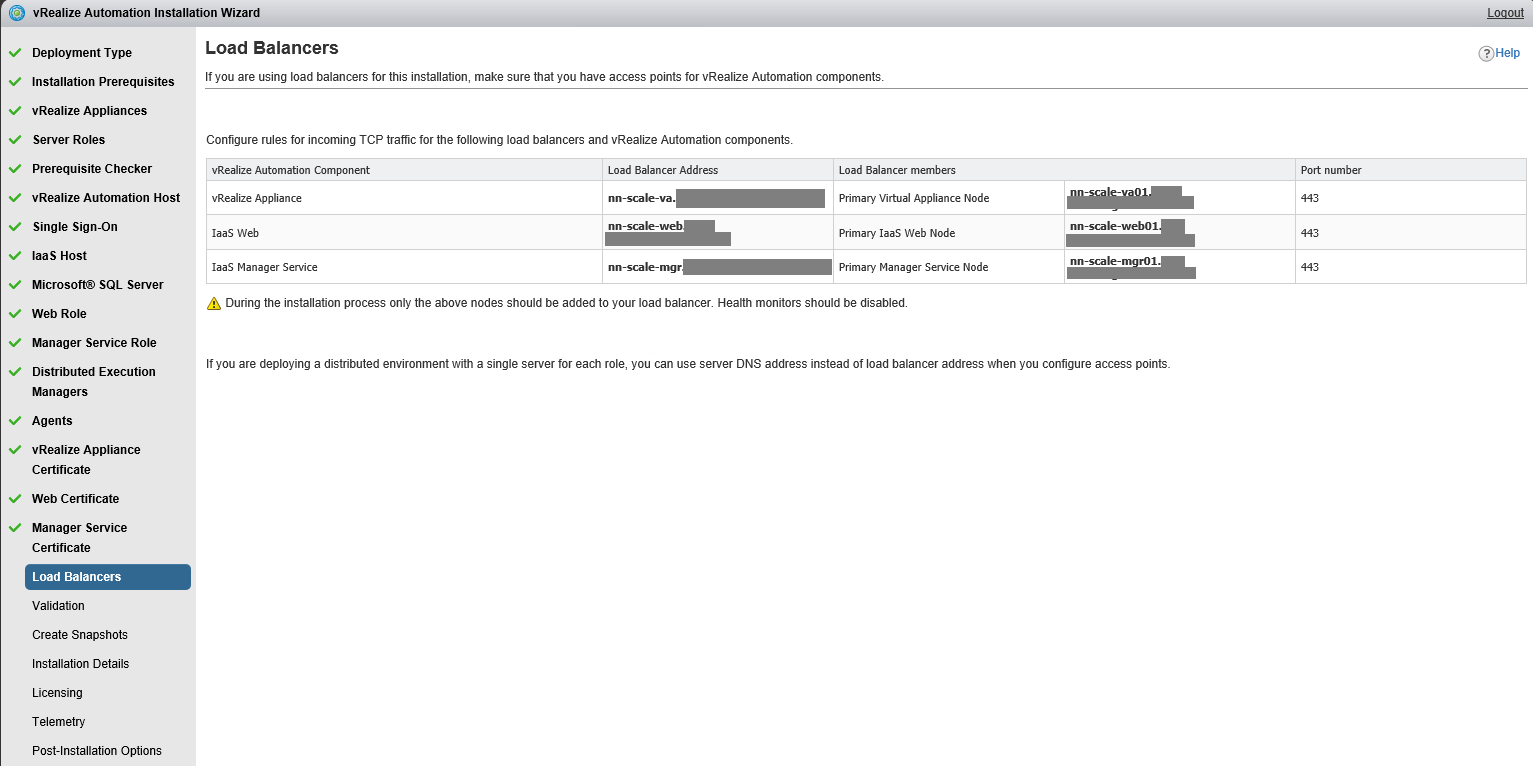

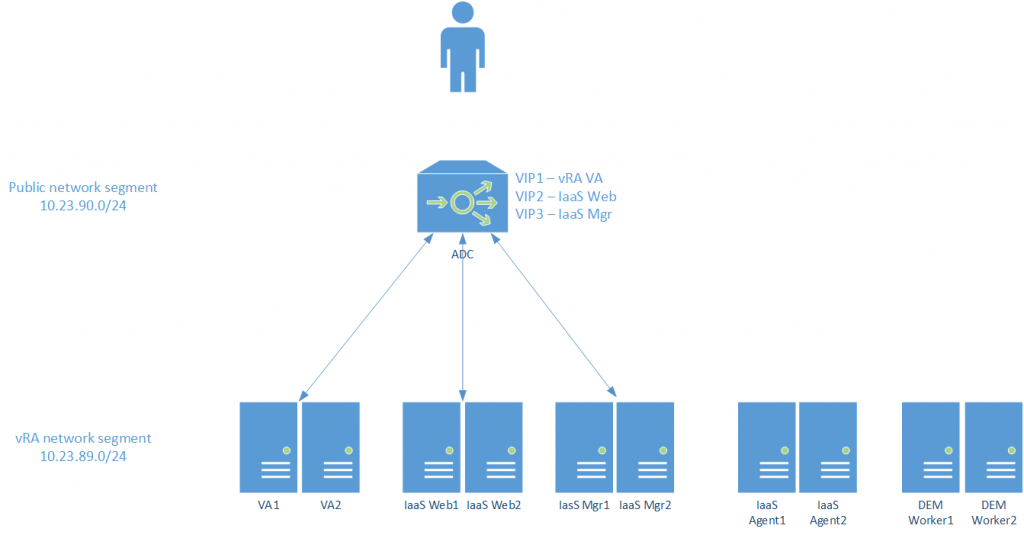

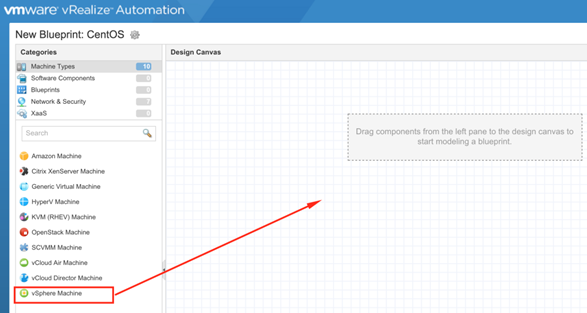

To make the references below easier to follow, here is a diagram of our vRA deployment.

Configuring Citrix NetScaler

- Configure the NetScaler device

Enable the Load Balancer (LB) and SSL modules.

You can do so from the NetScaler > System > Settings > Configure Basic Features page.

- Upload your certificate and private key

Go to NetScaler > Traffic Management > SSL > SSL Certificates

Click Install and upload your certificate chain + private key.

- Configure monitors

Go to NetScaler > Traffic Management > Load Balancing > Monitors

Click Add and provide the required information. Leave the default when nothing is specified.

Add the following monitors:

| Name | Type | Interval | Timeout | Send String | Receive String | Dest. Port | Secure |
|---|---|---|---|---|---|---|---|
| vra_https_va_web | HTTP | 5 seconds | 4990 milliseconds | GET /vcac/services/api/health | `HTTP/1\.(0\|1) (200\|204)` | 443 | yes |
| vra_https_iaas_web | HTTP-ECV | 5 seconds | 4990 milliseconds | GET /wapi/api/status/web | REGISTERED | 443 | yes |
| vra_https_iaas_mgr | HTTP-ECV | 5 seconds | 4990 milliseconds | GET /VMPSProvision | ProvisionService | 443 | yes |
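The receive strings in these monitors decide when a member counts as healthy. Expressing them as a small check function makes it easy to reason about which responses pass. A minimal sketch: `RECEIVE_RULES` and `is_healthy` are hypothetical names, and `raw_response` is assumed to be the full HTTP response decoded as text.

```python
import re

# Receive strings copied from the monitor table above.
RECEIVE_RULES = {
    "vra_https_va_web": re.compile(r"HTTP/1\.(0|1) (200|204)"),
    "vra_https_iaas_web": re.compile(r"REGISTERED"),
    "vra_https_iaas_mgr": re.compile(r"ProvisionService"),
}


def is_healthy(monitor: str, raw_response: str) -> bool:
    """Mark the member up when the monitor's receive pattern appears in the response."""
    return RECEIVE_RULES[monitor].search(raw_response) is not None


print(is_healthy("vra_https_va_web", "HTTP/1.1 204 No Content\r\n\r\n"))
```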

- Configure service groups

Go to NetScaler > Traffic Management > Load Balancing > Service Groups

Click Add and provide the required information. Leave the default when nothing is specified.

Enter each pool member as a new member of type Server Based.

Add the following service groups:

| Name | Health Monitors | Protocol | SG Members | Address | Port |
|---|---|---|---|---|---|
| pl_vra-va-00_443 | vra_https_va_web | SSL | ra-vra-va-01 | 10.23.90.223 | 443 |
| | | | ra-vra-va-02 | 10.23.90.224 | 443 |
| pl_iaas-web-00_443 | vra_https_iaas_web | SSL | ra-web-01 | 10.23.90.226 | 443 |
| | | | ra-web-02 | 10.23.90.227 | 443 |
| pl_iaas-man-00_443 | vra_https_iaas_mgr | SSL | ra-man-01 | 10.23.90.228 | 443 |
| | | | ra-man-02 | 10.23.90.229 | 443 |
| pl_vra-va-00_8444 | vra_https_va_web | SSL | ra-vra-va-01 | 10.23.90.223 | 8444 |
| | | | ra-vra-va-02 | 10.23.90.224 | 8444 |

- Configure virtual servers

Go to NetScaler > Traffic Management > Load Balancing > Virtual Servers

Click Add and provide the required information. Leave the default when nothing is specified.

Add the following virtual servers:

| Name | Protocol | Dest. Address | Port | Load Balancing Method | Service Group Binding | Certificate |
|---|---|---|---|---|---|---|
| vs_vra-va-00_443 | SSL | 10.23.89.101 | 443 | Roundrobin | pl_vra-va-00_443 | Select the appropriate certificate |
| vs_web-00_443 | SSL | 10.23.89.102 | 443 | Roundrobin | pl_iaas-web-00_443 | Select the appropriate certificate |
| vs_man-00_443 | SSL | 10.23.89.103 | 443 | Roundrobin | pl_iaas-man-00_443 | Select the appropriate certificate |
| vs_vra-va-00_8444 | SSL | 10.23.89.101 | 8444 | Roundrobin | pl_vra-va-00_8444 | Select the appropriate certificate |
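Each virtual server is a VIP and port pair bound to one service group, so the same VIP 10.23.89.101 serves port 443 and port 8444 from different groups. A minimal sketch of that mapping with round-robin selection; the dictionaries and the `RoundRobinBalancer` class are hypothetical, and unlike a real ADC the sketch ignores member health.

```python
from __future__ import annotations

import itertools

# Service groups and virtual servers transcribed from the NetScaler tables above.
SERVICE_GROUPS = {
    "pl_vra-va-00_443": [("10.23.90.223", 443), ("10.23.90.224", 443)],
    "pl_iaas-web-00_443": [("10.23.90.226", 443), ("10.23.90.227", 443)],
    "pl_iaas-man-00_443": [("10.23.90.228", 443), ("10.23.90.229", 443)],
    "pl_vra-va-00_8444": [("10.23.90.223", 8444), ("10.23.90.224", 8444)],
}
VIRTUAL_SERVERS = {
    ("10.23.89.101", 443): "pl_vra-va-00_443",
    ("10.23.89.102", 443): "pl_iaas-web-00_443",
    ("10.23.89.103", 443): "pl_iaas-man-00_443",
    ("10.23.89.101", 8444): "pl_vra-va-00_8444",
}


class RoundRobinBalancer:
    """Hands out the members of each service group in turn (health state ignored)."""

    def __init__(self, groups: dict[str, list[tuple[str, int]]]) -> None:
        self._cycles = {name: itertools.cycle(members) for name, members in groups.items()}

    def pick(self, vip: str, port: int) -> tuple[str, int]:
        group = VIRTUAL_SERVERS[(vip, port)]  # VIP:port -> bound service group
        return next(self._cycles[group])


lb = RoundRobinBalancer(SERVICE_GROUPS)
print(lb.pick("10.23.89.101", 443), lb.pick("10.23.89.101", 443))
```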

- Configure Persistence Profile

Go to NetScaler > Traffic Management > Load Balancing > Persistency Groups.

Click Add, enter the name source_addr_vra, then select Persistence > SOURCEIP from the drop-down menu.

Set the Timeout to 30 minutes.

Add all related Virtual Servers.

Click OK.
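Source-IP persistence with a 30-minute timeout can be modelled as a table keyed by client address: the first request is balanced normally, and later requests from the same IP go to the same member until the entry expires. A minimal sketch, assuming idle-timeout semantics (each request refreshes the entry); the class and function names are hypothetical, and the clock is passed in explicitly so the behaviour is easy to test.

```python
from typing import Callable, Dict, Optional, Tuple


class SourceIPPersistence:
    """Maps a client IP to the back-end member it was first sent to."""

    def __init__(self, timeout_s: float = 1800.0) -> None:
        self.timeout_s = timeout_s  # 30 minutes, as configured above
        self._table: Dict[str, Tuple[str, float]] = {}

    def lookup(self, client_ip: str, now: float) -> Optional[str]:
        entry = self._table.get(client_ip)
        if entry is None:
            return None
        member, last_seen = entry
        if now - last_seen > self.timeout_s:
            del self._table[client_ip]  # entry expired: forget the old member
            return None
        self._table[client_ip] = (member, now)  # refresh the idle timer
        return member

    def record(self, client_ip: str, member: str, now: float) -> None:
        self._table[client_ip] = (member, now)


def choose_member(persistence: SourceIPPersistence, client_ip: str, now: float,
                  pick_new: Callable[[], str]) -> str:
    """Reuse the persisted member if there is one, otherwise ask the LB method."""
    member = persistence.lookup(client_ip, now)
    if member is None:
        member = pick_new()
        persistence.record(client_ip, member, now)
    return member
```

Here `pick_new` stands in for the load balancing method, for example round robin over the service group members.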

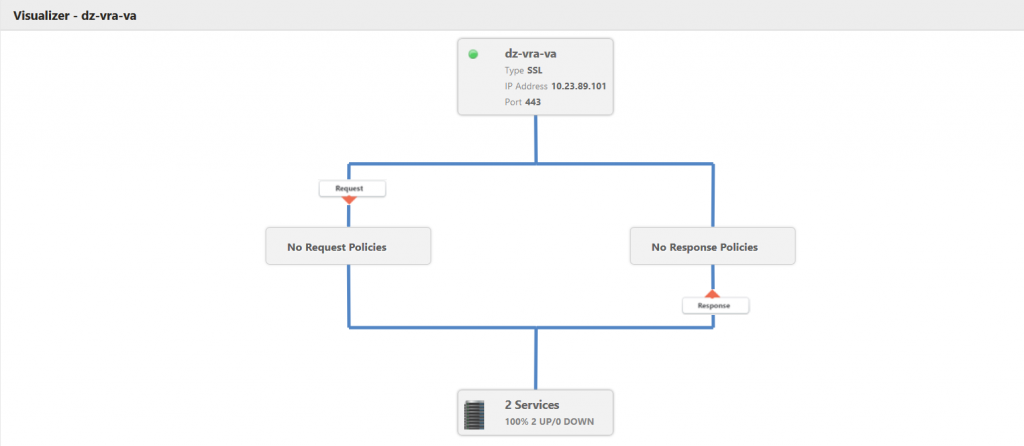

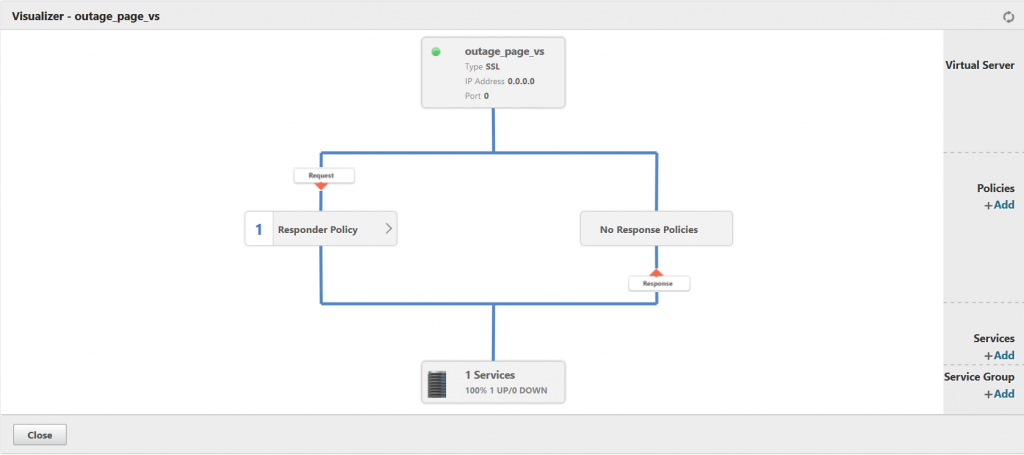

If everything is configured correctly, you should see the following for every virtual server in the LB Visualizer.

Configuring F5 BIG-IP

- Upload certificate and key pair

Navigate to System > File Management > SSL Certificate List

Click Import and select the certificate chain + private key.

Enter the same name for the certificate and the key; that way the device knows which key belongs to the certificate.

- Configure SSL profile

Navigate to Local Traffic > Profiles > SSL > Client

Click Create

For Parent Profile select clientssl

Enter a name, for example vra-profile-client.

Select the checkboxes next to Certificate, Key and Chain, then choose the correct certificate, key and chain.

- Configure custom persistence profile

Navigate to Local Traffic > Profiles > Persistence.

Click Create.

Enter the name source_addr_vra and select Source Address Affinity from the drop-down menu.

Enable Custom mode.

Set the Timeout to 1800 seconds (30 minutes).

Click Finished.

- Configure monitors

Navigate to Local Traffic > Monitors.

Click Create and provide the required information. Leave the default when nothing is specified.

Create the following monitors:

| Name | Type | Interval (seconds) | Timeout (seconds) | Send String | Receive String | Alias Service Port |
|---|---|---|---|---|---|---|
| vra_https_va_web | HTTPS | 3 | 10 | GET /vcac/services/api/health\r\n | `HTTP/1\.(0\|1) (200\|204)` | 443 |
| vra_https_iaas_web | HTTPS | 3 | 10 | GET /wapi/api/status/web\r\n | REGISTERED | |
| vra_https_iaas_mgr | HTTPS | 3 | 10 | GET /VMPSProvision\r\n | ProvisionService | |

- Configure pools

Navigate to Local Traffic > Pools.

Click Create and provide the required information. Leave the default when nothing is specified.

Enter each pool member as a New Node and add it to the New Members.

Create the following pools:

| Name | Health Monitors | Load Balancing Method | Node name | Address | Service Port |
|---|---|---|---|---|---|
| pl_vra-va-00_443 | vra_https_va_web | Round Robin | ra-vra-va-01 | 10.26.90.223 | 443 |
| | | | ra-vra-va-02 | 10.26.90.224 | 443 |
| pl_iaas-web-00_443 | vra_https_iaas_web | Round Robin | ra-web-01 | 10.26.90.226 | 443 |
| | | | ra-web-02 | 10.26.90.227 | 443 |
| pl_iaas-man-00_443 | vra_https_iaas_mgr | Round Robin | ra-man-01 | 10.26.90.228 | 443 |
| | | | ra-man-02 | 10.26.90.229 | 443 |
| pl_vra-va-00_8444 | vra_https_va_web | Round Robin | ra-vra-va-01 | 10.26.90.223 | 8444 |
| | | | ra-vra-va-02 | 10.26.90.224 | 8444 |

- Configure virtual servers

Navigate to Local Traffic > Virtual Servers.

Click Create and provide the required information. Leave the default when nothing is specified.

Create the following virtual servers:

| Name | Type | Dest. Address | Service port | SSL profile (client) | SSL Profile (Server) | Source address translation | Default pool | Default persistence profile |
|---|---|---|---|---|---|---|---|---|
| vs_vra-va-00_443 | Standard | 10.26.89.101 | 443 | vra-profile-client | serverssl | Auto Map | pl_vra-va-00_443 | source_addr_vra |
| vs_web-00_443 | Standard | 10.26.89.102 | 443 | vra-profile-client | serverssl | Auto Map | pl_iaas-web-00_443 | source_addr_vra |
| vs_man-00_443 | Standard | 10.26.89.103 | 443 | vra-profile-client | serverssl | Auto Map | pl_iaas-man-00_443 | None |
| vs_vra-va-00_8444 | Standard | 10.26.89.101 | 8444 | vra-profile-client | serverssl | Auto Map | pl_vra-va-00_8444 | source_addr_vra |
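Most BIG-IP objects in this section reference each other by name, so a single typo (for example, a client SSL profile created under one name but referenced under another) breaks a virtual server. A minimal sketch that cross-checks the names from the tables above; the dictionaries are a hypothetical transcription, not a BIG-IP export format, and `find_dangling_references` is an illustrative helper.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical transcription of the objects created in the steps above.
MONITORS = {"vra_https_va_web", "vra_https_iaas_web", "vra_https_iaas_mgr"}
CLIENT_SSL_PROFILES = {"vra-profile-client"}
PERSISTENCE_PROFILES = {"source_addr_vra"}
POOLS = {  # pool name -> health monitor
    "pl_vra-va-00_443": "vra_https_va_web",
    "pl_iaas-web-00_443": "vra_https_iaas_web",
    "pl_iaas-man-00_443": "vra_https_iaas_mgr",
    "pl_vra-va-00_8444": "vra_https_va_web",
}
VIRTUAL_SERVERS = {  # name -> (client SSL profile, default pool, persistence profile)
    "vs_vra-va-00_443": ("vra-profile-client", "pl_vra-va-00_443", "source_addr_vra"),
    "vs_web-00_443": ("vra-profile-client", "pl_iaas-web-00_443", "source_addr_vra"),
    "vs_man-00_443": ("vra-profile-client", "pl_iaas-man-00_443", None),
    "vs_vra-va-00_8444": ("vra-profile-client", "pl_vra-va-00_8444", "source_addr_vra"),
}


def find_dangling_references(monitors: set[str], ssl_profiles: set[str],
                             persistence_profiles: set[str], pools: dict[str, str],
                             virtual_servers: dict[str, tuple[str, str, Optional[str]]]) -> list[str]:
    """Return one message per name that is referenced but never defined."""
    problems = []
    for pool, monitor in pools.items():
        if monitor not in monitors:
            problems.append(f"pool {pool}: unknown monitor {monitor}")
    for vs, (ssl_profile, pool, persistence) in virtual_servers.items():
        if ssl_profile not in ssl_profiles:
            problems.append(f"{vs}: unknown client SSL profile {ssl_profile}")
        if pool not in pools:
            problems.append(f"{vs}: unknown pool {pool}")
        if persistence is not None and persistence not in persistence_profiles:
            problems.append(f"{vs}: unknown persistence profile {persistence}")
    return problems


print(find_dangling_references(MONITORS, CLIENT_SSL_PROFILES, PERSISTENCE_PROFILES,
                               POOLS, VIRTUAL_SERVERS))
```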

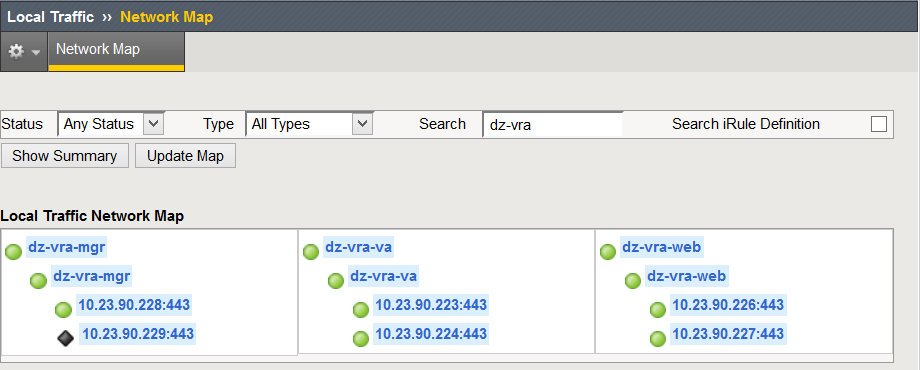

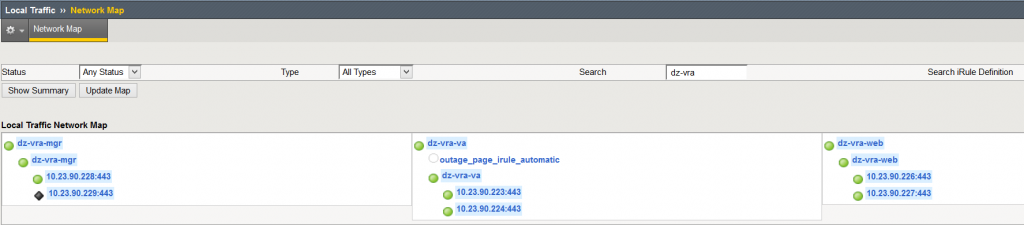

If everything is set up correctly, you should see the following in Local Traffic > Network Map.

Configuring VMware NSX

- Configure Global Settings

Log in to NSX, select the Manage tab, click Settings, and select Interfaces.

Double-click to select your Edge device from the list.

Click vNIC# for the external interface that hosts the VIP IP addresses and click the Edit icon.

Select the appropriate network range for the NSX Edge and click the Edit icon.

Add the IP addresses to be assigned to the VIPs, and click OK.

Click OK to exit the interface configuration subpage.

- Enable load balancer functionality

Select the Load Balancer tab and click the Edit icon.

Select Enable Load Balancer, Enable Acceleration, and Logging, if required, and click OK.

- Upload certificate chain and key

Go to Manage > Settings > Certificates and upload the certificate chain + private key.

- Add application profiles

Click Application Profiles on the window pane on the left.

Click the Add icon to create the Application Profiles required for vRealize Automation by using the information from the table below. Leave the default when nothing is specified.

| Name | Type | Enable SSL Pass-through | Configure Service Certificate | Virtual Server Certificate | Timeout | Persistence |
|---|---|---|---|---|---|---|
| IaaS Manager | HTTPS | Deselected | Selected | Select the correct certificate | – | None |
| IaaS Web | HTTPS | Deselected | Selected | Select the correct certificate | 1800 seconds | Source IP |
| vRealize Automation VA Web | HTTPS | Deselected | Selected | Select the correct certificate | 1800 seconds | Source IP |

- Add service monitors

Click Service Monitoring in the left pane.

Click the Add icon to create the Service Monitors required for vRealize Automation using the information from the table below. Leave the default when nothing is specified.

| Name | Interval (seconds) | Timeout (seconds) | Retries | Type | Method | URL | Receive | Expected |
|---|---|---|---|---|---|---|---|---|
| vRealize Automation VA Web | 3 | 10 | 3 | HTTPS | GET | /vcac/services/api/health | | 200, 204 |
| IaaS Web | 3 | 10 | 3 | HTTPS | GET | /wapi/api/status/web | REGISTERED | |
| IaaS Manager | 3 | 10 | 3 | HTTPS | GET | /VMPSProvision | ProvisionService | |
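In an NSX service monitor, the Expected field is matched against the HTTP status line, while the Receive field is matched against the response content. A minimal sketch of those two checks; the function name is hypothetical, and treating "200, 204" as "either code is acceptable" is our assumption about how the listed values are meant.

```python
from __future__ import annotations

from typing import Optional


def nsx_monitor_passes(raw_response: str, expected: Optional[list[str]] = None,
                       receive: Optional[str] = None) -> bool:
    """Expected is checked against the status line, Receive against the body."""
    head, _, body = raw_response.partition("\r\n\r\n")
    status_line = head.split("\r\n", 1)[0]
    if expected and not any(code in status_line for code in expected):
        return False
    if receive and receive not in body:
        return False
    return True


print(nsx_monitor_passes("HTTP/1.1 204 No Content\r\n\r\n", expected=["200", "204"]))
```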

- Add pools

Click Pools in the left pane.

Click the Add icon to create the Pools required for vRealize Automation using the information from the table below. Leave the default when nothing is specified.

You can either use the IP addresses of the pool members or select them as a Virtual Center Container.

| Pool Name | Algorithm | Monitors | Member Name | Example IP Address / vCenter Container | Port | Monitor Port |
|---|---|---|---|---|---|---|
| pool_vra-va-web_443 | Round Robin | vRealize Automation VA Web | vRA VA1 | 10.26.90.223 | 443 | |
| | | | vRA VA2 | 10.26.90.224 | 443 | |
| pool_iaas-web_443 | Round Robin | IaaS Web | IaaS Web1 | 10.26.90.226 | 443 | |
| | | | IaaS Web2 | 10.26.90.227 | 443 | |
| pool_iaas-manager_443 | Round Robin | IaaS Manager | IaaS Man1 | 10.26.90.228 | 443 | |
| | | | IaaS Man2 | 10.26.90.229 | 443 | |
| pool_vra-rconsole_8444 | Round Robin | vRealize Automation VA Web | vRA VA1 | 10.26.90.223 | 8444 | 443 |
| | | | vRA VA2 | 10.26.90.224 | 8444 | 443 |
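The remote console pool carries traffic on port 8444 but is health-checked on port 443, as the Monitor Port column shows, so the monitor probes a different port than the one clients use. A small sketch of that rule; `PoolMember` and `health_check_target` are hypothetical names.

```python
from typing import NamedTuple, Optional, Tuple


class PoolMember(NamedTuple):
    name: str
    ip: str
    port: int                           # port that receives client traffic
    monitor_port: Optional[int] = None  # port the health monitor probes, if different


def health_check_target(member: PoolMember) -> Tuple[str, int]:
    """Return the (ip, port) a monitor would probe for this member."""
    port = member.monitor_port if member.monitor_port is not None else member.port
    return (member.ip, port)


rconsole_pool = [
    PoolMember("vRA VA1", "10.26.90.223", 8444, monitor_port=443),
    PoolMember("vRA VA2", "10.26.90.224", 8444, monitor_port=443),
]
print([health_check_target(m) for m in rconsole_pool])
```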

- Add virtual servers

Click Virtual Servers on the left pane.

Click the Add icon to create the Virtual Servers required for vRealize Automation using the information from the table below. Leave the default when nothing is specified.

| Name | IP Address | Protocol | Port | Default Pool | Application Profile | Application Rule |
|---|---|---|---|---|---|---|
| vs_vra-va-web_443 | 10.26.90.101 | HTTPS | 443 | pool_vra-va-web_443 | vRealize Automation VA Web | |
| vs_iaas-web_443 | 10.26.90.102 | HTTPS | 443 | pool_iaas-web_443 | IaaS Web | |
| vs_iaas-manager_443 | 10.26.90.103 | HTTPS | 443 | pool_iaas-manager_443 | IaaS Manager | |
| vs_vra-va-rconsole_8444 | 10.26.90.101 | HTTPS | 8444 | pool_vra-rconsole_8444 | vRealize Automation VA Web | |

You can read more about the supported deployment scenarios for vRA in the official Load Balancing Guide, which covers configuring vRA with SSL pass-through load balancing.

In conclusion, this guide only scratches the surface of what you can accomplish with an SSL-terminating ADC, but it is a good foundation on which to build your complete integration.

If you are interested in more articles like this one, stay tuned to VMware Blogs.


The post How to use vRealize Automation with SSL offloading in multi-arm load-balancing topology appeared first on VMware Cloud Management.

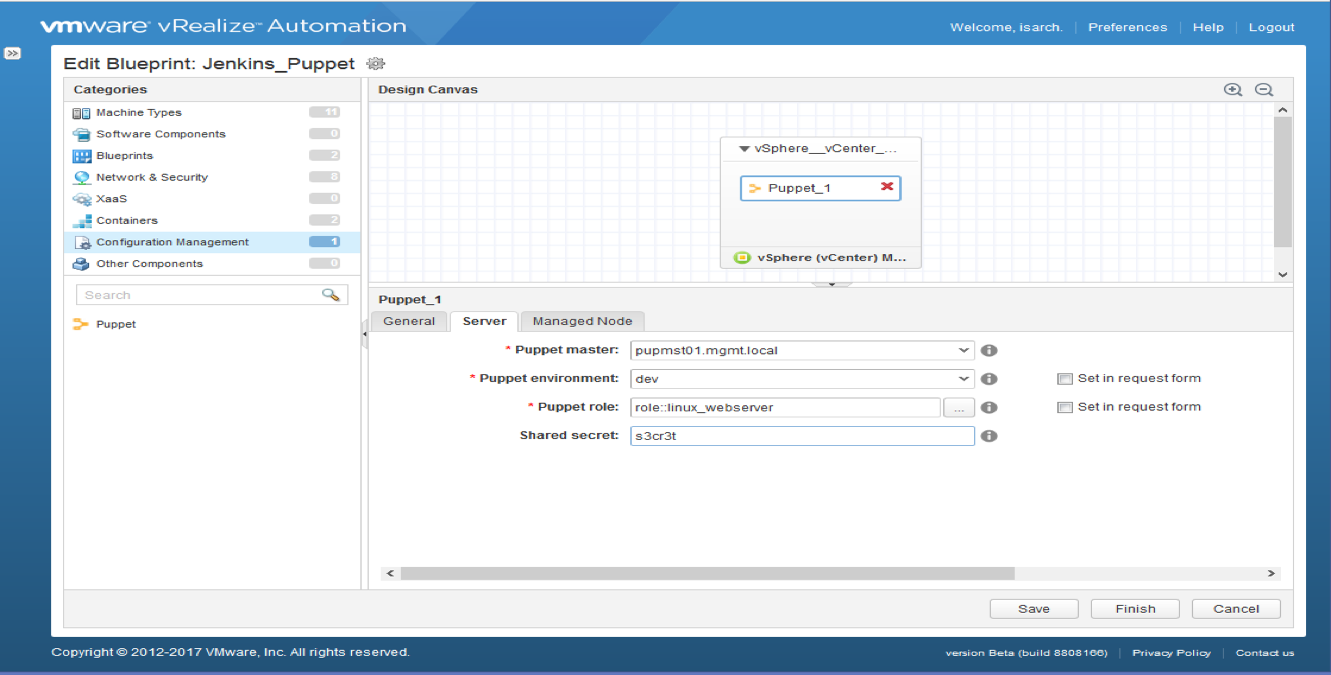

Agility is the key to accelerating digital transformation. For an IT team, this translates into the capability to quickly serve application developers by addressing their infrastructure, operating system, middleware and other service needs. vRealize Automation delivers agility by enabling self-service workflows while integrating into existing IT ecosystem tools and processes. Sound ambitious? vRealize Automation makes it happen through its uniquely extensible platform, which integrates with and can cover the gamut of ecosystem tooling prevalent among our customers. Puppet, as one of the leading vendors in the configuration management domain, demonstrates how VMware and our ecosystem partners together bring best-of-breed solutions that continuously create more value for our joint customers. Congratulations once again to Puppet, and we look forward to more innovative joint solutions!
